Latest Posts

-

Project Mini Rack

Homelab

Homelab / ˈhoʊm.læb /

noun - A personal IT environment where individuals can experiment, learn, and test various technologies.

- A server (or several) in your home where you host applications and virtualized systems for testing and development, or for home and personal use.

-

Deniable Encryption

I remember years ago, when I first made a bootable Kali thumb drive (‘BackTrack’ back then) with encrypted persistence, there was an option to create a sort of “self-destruct”: if you typed a special password, the drive would be erased rather than unlocked. Kali still offers this package: cryptsetup-nuke-password.

For some reason this ability still fascinates me: the option to self-destruct your stuff at a moment’s notice. I’ve always used LUKS encryption on Linux devices, but this specific “nuke password” setup doesn’t seem to exist much outside of Kali.

I kept reading, did some experimenting on an older laptop, and eventually decided to set up my Framework 13 laptop with deniable encryption.

Small Modules Part 2

Natural Language Passphrases

Previously I talked about making small modules as an excuse to practice building tools and to focus on making something that works well. I ended by saying I might try turning my ‘New-NaturalLanguagePassword Script’ into a module.

I followed through on this not long after that post; the first version was published in March and the most recent one in May. If you want to skip right to checking it out, I invite you to visit its GitHub page: ‘PSPhrase’

I wanted it to work on PowerShell v5.1 or newer, on Windows, Linux, or Mac. I also wanted to use the ‘Sampler Module’ for development.

The Checklist

The first thing I like to do before I even open an IDE is to write down the goals for what this thing will do.

- Retain all existing configuration options from the script
- Store word lists outside of the ps1 files
- Allow for saving parameters/values as 'configuration'

It would be easy enough to retain all of the existing parameters from the script, since I’d already written them. In the script, the adjective and noun word lists are stored directly in the code as hashtables whose numeric keys correspond to dice rolls. This is an artifact of the script originally being based on diceware passwords. Since I’m doing my own thing now, I decided the words can live in simple text files with one word per line. This simplifies maintaining the word lists and doesn’t constrain us to number ranges that align with six-sided dice rolls.
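For a rough idea of what plain-text word lists buy you, here’s a minimal sketch. It is not PSPhrase’s actual code: the function name Get-PassphraseSketch, its parameters, and the two-file layout are assumptions for illustration. Because each file holds one word per line, Get-Content hands back an array of words and Get-Random can pick from it directly, with no dice-roll numbering.

```powershell
# Sketch only: build a passphrase from two plain-text word lists (one word per line).
# Function name, parameters, and file layout are illustrative assumptions, not PSPhrase's API.
function Get-PassphraseSketch {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $AdjectivePath,
        [Parameter(Mandatory)] [string] $NounPath,
        [ValidateRange(1, 10)] [int] $Pairs = 2,
        [string] $Delimiter = '-'
    )

    # Get-Content returns one string per line; skip blank lines and trim stray whitespace
    $adjectives = @(Get-Content -Path $AdjectivePath | Where-Object { $_.Trim() } | ForEach-Object { $_.Trim() })
    $nouns      = @(Get-Content -Path $NounPath | Where-Object { $_.Trim() } | ForEach-Object { $_.Trim() })

    # Alternate adjective/noun, picking each word at random from its list
    $words = foreach ($i in 1..$Pairs) {
        Get-Random -InputObject $adjectives
        Get-Random -InputObject $nouns
    }
    $words -join $Delimiter
}
```

Usage would look like Get-PassphraseSketch -AdjectivePath .\adjectives.txt -NounPath .\nouns.txt -Pairs 3. Adding a word is just adding a line to a file. One caveat: Get-Random isn’t documented as a cryptographically secure generator, so a real password tool may want a stronger source of randomness.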

Lastly, I wanted the end user to be able to save parameters and values as their default preferences and have them persist between sessions. I know that ‘Parameter Default Values’ exist, but I wanted to tailor this specifically for use with the module.

What’s In a Name?
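One way a “save my defaults” feature could work, sketched under assumptions: preferences are written as JSON to a file in the user’s home directory, then read back and splatted into the generating command. The function names and the file name .phrase-sketch.json are hypothetical; this isn’t necessarily how PSPhrase does it.

```powershell
# Hypothetical sketch: persist preferred parameter values between sessions as JSON.
# Function names and the default file location are illustrative assumptions.
function Save-PhraseDefaults {
    param(
        [Parameter(Mandatory)] [hashtable] $Defaults,
        [string] $Path = (Join-Path -Path $HOME -ChildPath '.phrase-sketch.json')
    )
    # Serialize the parameter name/value pairs to disk
    $Defaults | ConvertTo-Json | Set-Content -Path $Path -Encoding UTF8
}

function Get-PhraseDefaults {
    param(
        [string] $Path = (Join-Path -Path $HOME -ChildPath '.phrase-sketch.json')
    )
    # Nothing saved yet: return an empty hashtable so splatting still works
    if (-not (Test-Path -Path $Path)) { return @{} }

    # ConvertFrom-Json yields a PSCustomObject; copy it into a hashtable for splatting
    $saved = Get-Content -Path $Path -Raw | ConvertFrom-Json
    $table = @{}
    foreach ($prop in $saved.PSObject.Properties) { $table[$prop.Name] = $prop.Value }
    $table
}
```

After $saved = Get-PhraseDefaults, the generating command can be called with @saved. The built-in alternative, $PSDefaultParameterValues, would need to be set in a profile to persist, and it applies to any command rather than being scoped to the module.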

After listening to James Brundage talk at the 2024 PowerShell Summit about how important a good name and branding are for a module, I like to start there. The script name is very long and quite literal. This module would be generating passwords; not just passwords, but passphrases specifically. I like incorporating ‘PS’ into a module name when it works, and it didn’t take long to think of ‘PSPhrase’. That meant I could name the primary function Get-PSPhrase, which is a lot easier to type than New-NaturalLanguagePassword.

Small Modules

Size Doesn’t Matter

When I made my first PowerShell module it was mostly an experiment to see if I could understand how modules worked. It was simply tools.psm1, and it lived in $HOME\Documents\PowerShell\Modules\Tools. That was all it took to move some function definitions out of my $Profile and into a place where they would automatically load when called.
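To show how little a script module needs, here’s a minimal sketch of what a file like tools.psm1 could contain. Get-FolderSize is a hypothetical example function, not one from my original module. Module autoloading expects the .psm1 base name to match its folder name (Tools\Tools.psm1) inside a folder listed in $env:PSModulePath, and without an Export-ModuleMember call or a manifest, every function in the .psm1 is exported.

```powershell
# Tools.psm1: a one-function "micro module" (hypothetical example).
# Save as <a folder on $env:PSModulePath>\Tools\Tools.psm1 so it autoloads when the function is called.
function Get-FolderSize {
    [CmdletBinding()]
    param(
        [Parameter(ValueFromPipeline)]
        [string] $Path = '.'
    )
    process {
        # Sum the length of every file below $Path; unreadable folders are skipped
        $sum = (Get-ChildItem -Path $Path -Recurse -File -ErrorAction SilentlyContinue |
                Measure-Object -Property Length -Sum).Sum
        [PSCustomObject]@{
            Path      = (Resolve-Path -Path $Path).Path
            SizeBytes = [long]$sum   # [long]$null becomes 0 for an empty folder
        }
    }
}
```

Once the file is in place, typing Get-FolderSize -Path $HOME\Downloads in a new session loads the module automatically; no Import-Module needed.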

This would later grow into a bigger collection of daily-use functions for me and my team. At its peak I think there were around 44 functions, a couple of class definitions, and a few format files. Some of the modules you may be familiar with (e.g. ImportExcel, Posh-SSH, PowerCLI) can have upwards of 70 public functions. These modules represent tremendous effort and provide huge value to the greater PowerShell community. But not every module needs to be a monolith of code.

Something Kevin Marquette wrote about on his blog that I found very encouraging was the concept of building “micro modules.” Just because a module exports one or two functions doesn’t mean it’s not valuable.

What I really like about a module is the ability to package up PowerShell functions, and any requirements, into something that’s easy to install and use from the CLI. You can define classes and format files to go along with your module, and the process of getting the module doesn’t change one bit. I could write a script to accomplish a lot of the same end result, but here’s my general philosophy on PowerShell code:

- Cmdlets are used in the CLI interactively to perform tasks.

- Custom functions are used in the CLI interactively to perform tasks. They expand on existing cmdlets or fill a more custom need.

- Scripts are an orchestration/automation tool used to perform a set of actions, sometimes unattended.

- Modules are a deliverable way to install functions (and functionality) onto a system.

Maybe you’ve written a logging function that you want to incorporate into a bunch of scripts that might run unattended. It could be just 40 lines of code or so, and you could include it at the top of all your scripts. Or maybe it lives in a separate .ps1 file that you dot source at script execution; compared with copying it into every script, that makes changes to your logging function much easier, since there’s only one copy to edit. Or you turn your logging function into a module and publish it to a repository. Now it’s installable on any machine you need it on, and you can use built-in module management cmdlets like Update-Module to handle changes.

If you’re writing PowerShell functions and putting them into modules, you’re making tools. If that tool happens to do one thing really well, that sounds like a pretty good tool to me. I don’t need a screwdriver that’s also a flashlight, a radio, and a spatula. I need a screwdriver. Preferably one that’s so good I never have to think about it. Go ahead and turn that function, or two, into a shippable module.
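For a sense of scale, here’s a minimal sketch of the kind of logging function described above. Write-Log, its parameters, and its line format are hypothetical. The same function body works dot-sourced from a .ps1 (. .\Write-Log.ps1) or saved as Logging\Logging.psm1 in a module path, where Import-Module Logging (or autoloading) picks it up.

```powershell
# Hypothetical logging helper; name, parameters, and line format are illustrative.
function Write-Log {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $Message,
        [ValidateSet('INFO', 'WARN', 'ERROR')] [string] $Level = 'INFO',
        [Parameter(Mandatory)] [string] $Path
    )
    # ISO 8601 sortable timestamp ('s' format), level tag, then the message,
    # e.g. "2024-05-01T13:45:10 [WARN] Disk low"
    $line = '{0} [{1}] {2}' -f (Get-Date -Format 's'), $Level, $Message
    Add-Content -Path $Path -Value $line
    # Echo to the verbose stream so interactive runs can see it with -Verbose
    Write-Verbose -Message $line
}
```

Publishing it (for example with Publish-Module, which expects a module manifest alongside the .psm1) is what lets other machines use Install-Module and Update-Module instead of copying files around.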

-

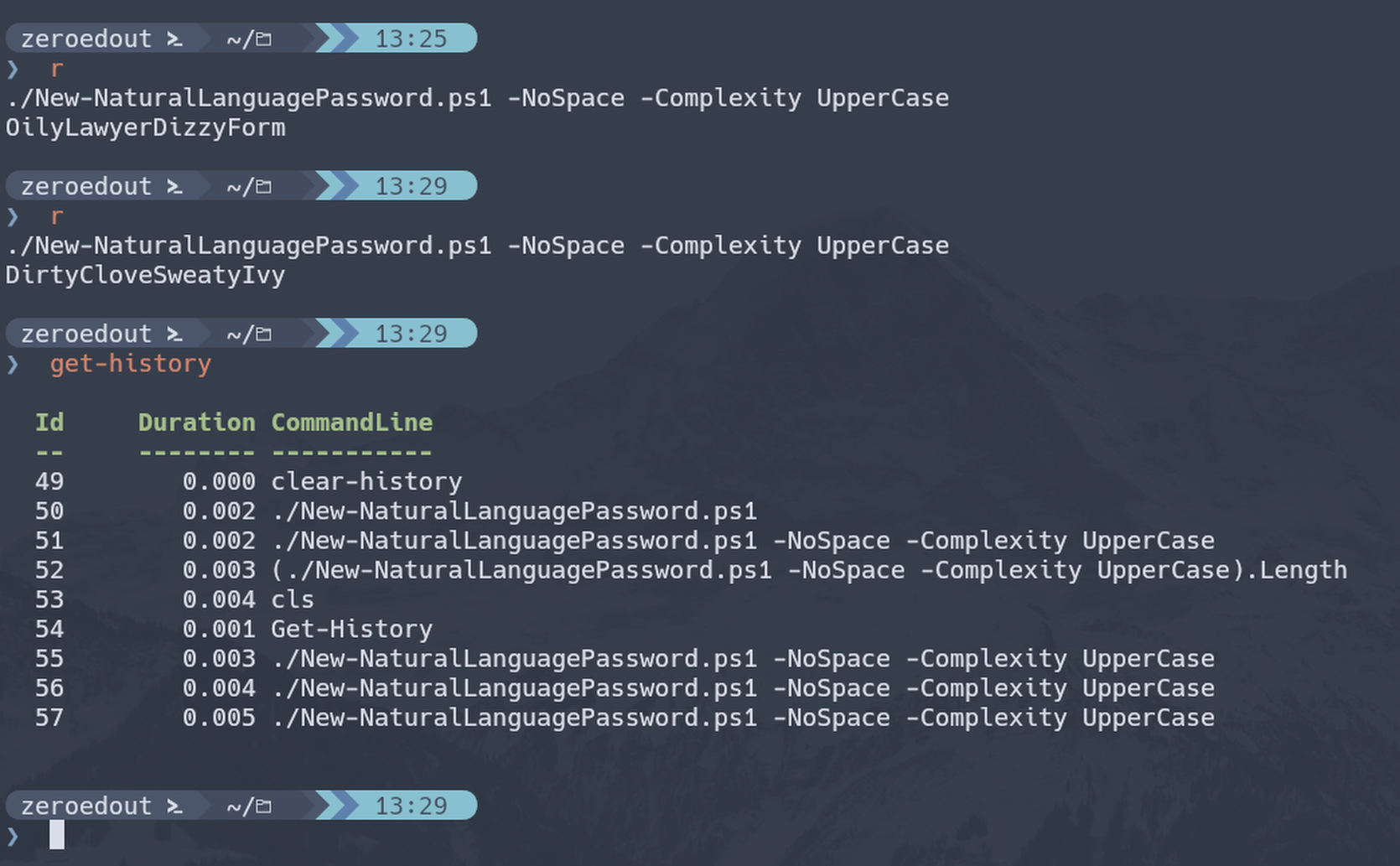

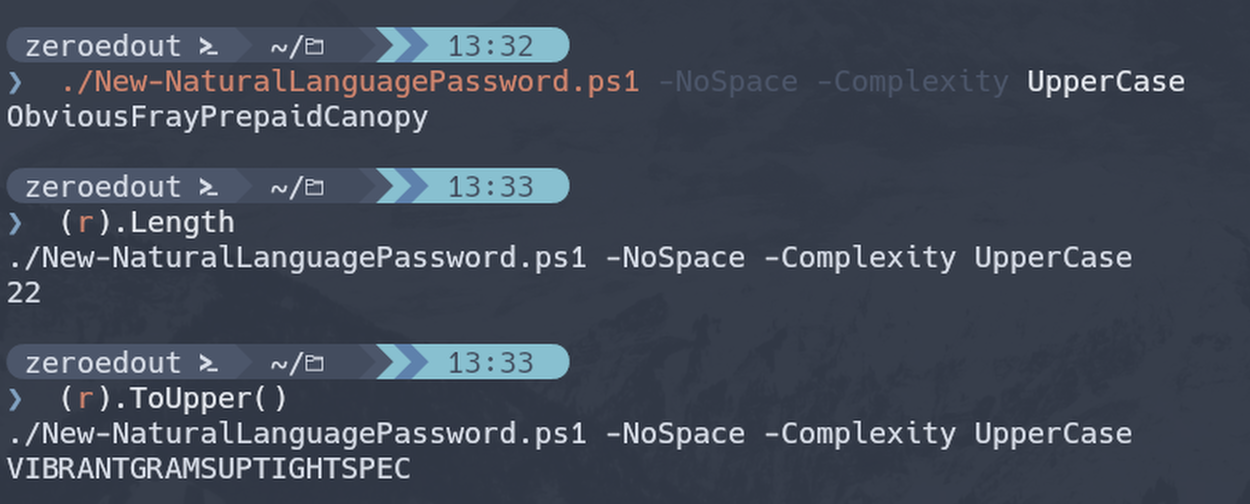

Quick Tip Invoke-History

The more time I spend living in the CLI the more I appreciate learning and adopting shorthand for operations. In Powershell the aliases for Where-Object and ForEach-Object have become second nature. Using up arrow to repeat the previous command and add more to it a near constant occurence. One situation I find myself in quite a bit however is running a command in Powershell, and then finding that based on the output I’d actually like to re-run that command an get the value from a property instead. On the keyboard I’ve been using for the past couple of years I would typically just hit up-arrow to repeat the last command,

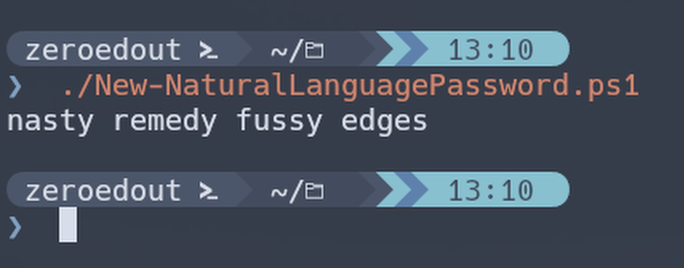

Hometo put my cursor on the beginning of the line, type a(thenEnda closing)then use dot notation to call the property I wanted the value of.As an example let’s say I run a script and see what the output looks like:

From this I observe the output and decide that I’d like to add some parameters. No problem, I’ll just up-arrow to repeat the command and add the parameters to the end:

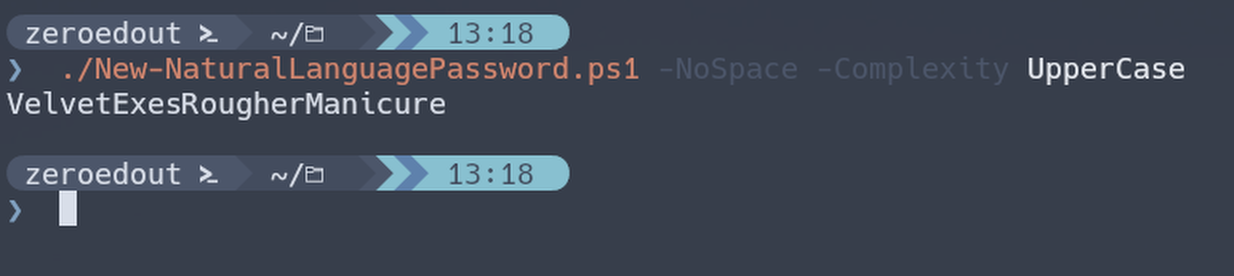

Great, but if I wanted to call a property or method on that output object, I’d either have to pipe it to another cmdlet or wrap it in parentheses and then call the property I want with dot notation. For example:

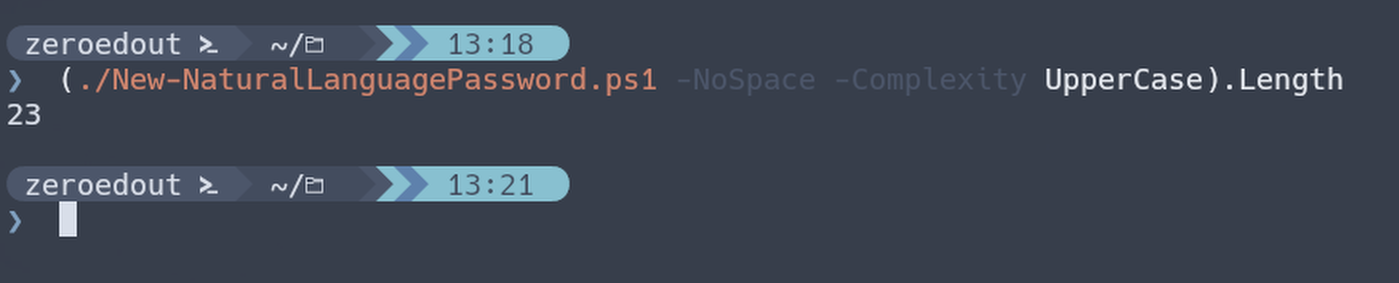

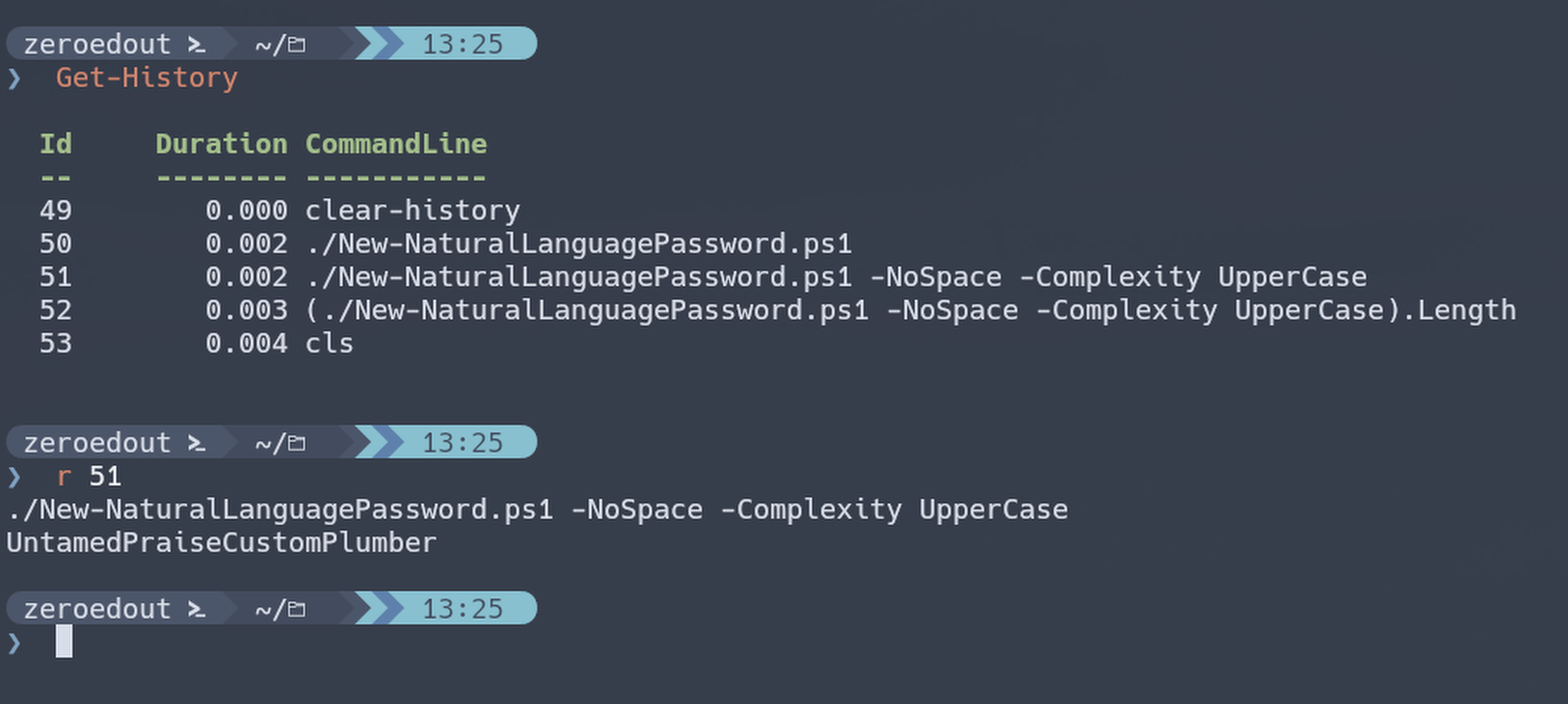
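In text form, the two options look roughly like this. Get-Item -Path $HOME is only a stand-in for whatever command was just run.

```powershell
# Two ways to pull one property out of a command's output.
# Get-Item -Path $HOME is a stand-in for "the command I just ran".

# 1. Wrap the command in parentheses, then use dot notation on the result
$viaDot = (Get-Item -Path $HOME).Name

# 2. Pipe the output to Select-Object and expand the property
$viaPipe = Get-Item -Path $HOME | Select-Object -ExpandProperty Name

$viaDot
$viaPipe
```

Both produce the same string; the parentheses version is shorter to type, which is exactly why the cursor gymnastics matter.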

Seems simple enough: Home key, parenthesis, End key, parenthesis, dot, property name. But my new keyboard is a 60% layout without dedicated arrow keys, so I have to hold another key to reach a layer that has them.

Invoke-History Has Entered the Chat

I remembered that along with Get-History there was Invoke-History and its alias, ‘r’. I’ve previously used this much like repeating commands in bash: run Get-History, find the number of the command, then use r <num> to repeat it:

Through experimentation I found that calling r by itself repeats the previous command by default.

The next thing I tried was wrapping ‘r’ in an expression to see if I could then use the dot shortcut to retrieve a property or method:

And shazam! Now, instead of needing arrow keys or Home/End keys, I can start from a fresh prompt and type (r) followed by whatever I want to do with the result of the previous command.

-

PowerShell Summit 2024

I got the opportunity this week to attend the 2024 PowerShell Summit in Bellevue, Washington. If you have the opportunity to go, whether you’re brand new to PowerShell or a steely-eyed veteran, I highly recommend it.

Beyond the individual sessions and workshops, the conversations throughout the day in hallways, at tables, and even at dinner are invaluable. I’m still a bit overwhelmed, but since the conference I’ve managed to spend some time updating my ProtectStrings module. I wanted to clean up some of the code and also make it cross-platform. After the conference I no longer view PowerShell as strictly a Windows shell. Despite having PowerShell 7.x installed on my Linux computer, I still wrote most of my code on a Windows machine and never thought much about using it on Linux.

After what I saw at the conference, I’ve got a renewed focus on tool making and compatibility. I hope I get the chance to attend next year as well.

SecretStore Module

The SecretManagement module is a PowerShell module intended to make it easier to store and retrieve secrets.

The secrets are stored in SecretManagement extension vaults. An extension vault is a PowerShell module that has been registered to SecretManagement, and exports five module functions required by SecretManagement. An extension vault can store secrets locally or remotely. Extension vaults are registered to the current logged in user context, and will be available only to that user (unless also registered to other users).

SecretManagement Module on GitHub

This is a really cool project and an awesome tool from Microsoft. I see it referred to a lot in different PowerShell communities as a recommended solution for dealing with secrets in automation. I haven’t had occasion to use it myself, but I had often thought about writing a PowerShell-based password manager (until SecretManagement was released).

Relevant to my interests: if you just want to store secrets locally on your computer for use in scripts, you’ll want to look at the SecretStore module.

SecretStore Module on GitHub

It stores secrets locally on file for the current user account context, and uses .NET crypto APIs to encrypt file contents. Secrets remain encrypted in-memory, and are only decrypted when retrieved and passed to the user. This module works over all supported PowerShell platforms on Windows, Linux, and macOS.

Since theirs is cross-platform and mine isn’t, the .NET runtime underneath is probably different, but the key-derivation class is likely the same. For reference, .NET names its PBKDF2 implementation after the RFC that defines it: “Rfc2898DeriveBytes”. Since this is all publicly available on GitHub, I thought I would search through the relevant C# code and try to understand how they did it differently. Here is the file I found where I believe PBKDF2 is happening:

Utils.cs

There are two sections that drew my attention. The first:

```csharp
private static byte[] DeriveKeyFromPassword(
    byte[] passwordData,
    int keyLength)
{
    try
    {
        using (var derivedBytes = new Rfc2898DeriveBytes(
            password: passwordData,
            salt: salt,
            iterations: 1000))
        {
            return derivedBytes.GetBytes(keyLength);
        }
    }
    finally
    {
        ZeroOutData(passwordData);
    }
}
```

And the second:

-

Reset Expiration Clock

With more and more people working remotely there’s been a huge uptick in VPN usage. A lot of organizations have had to completely rethink some of their previous IT policies and procedures. Some things that used to be simple are now slightly more complicated.

One thing I wasn’t aware of, being so far removed from front-line customer support at work, was that a lot of our users’ passwords were expiring while they were working remotely. With an expired password they couldn’t connect to the VPN, and without connecting to the VPN they couldn’t update their password. Self-service password reset would be the obvious answer, but unfortunately it isn’t within our control. In some cases users were told to come into the nearest office so they could sign in to their computer on the network and then update their password. In other cases the help desk was resetting their password and dictating it to them over the phone. More often than not, though, the help desk was asking for the user’s current password and resetting it in AD to that. Obviously this is all really bad (especially that last one), but there wasn’t an available solution to stop it from happening.

I read that there was a way with PowerShell to essentially reset the password expiration clock on a user account to push the date out. If your password expired yesterday and the domain policy was a 90-day password, then “resetting” it would change your expiration date to 90 days from now. This would make the user’s currently configured password valid again and prevent any form of password sharing. The user could then manually initiate a password change once they were up and running again.

The pwdLastSet Attribute

The pwdLastSet attribute in Active Directory contains a record of the last time the account’s password was set. Here is the definition from Microsoft:

“The date and time that the password for this account was last changed. This value is stored as a large integer that represents the number of 100 nanosecond intervals since January 1, 1601 (UTC). If this value is set to 0 and the User-Account-Control attribute does not contain the UF_DONT_EXPIRE_PASSWD flag, then the user must set the password at the next logon.”

There’s also the PasswordLastSet property, which is just the pwdLastSet attribute converted into a DateTime object, which is a lot more readable. But if you want to change an account’s Password Last Set directly, it’s done via the pwdLastSet attribute. Knowing that it’s stored as a large integer representing “file time” is important when we start making changes to it.
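Because pwdLastSet is a file time, .NET can convert it directly. Here’s a minimal sketch; the helper name ConvertFrom-PwdLastSet is hypothetical, and it treats 0 as “no date”, since per the definition above 0 means the user must set the password at next logon.

```powershell
# Hypothetical helper: convert a raw pwdLastSet value
# (100-nanosecond intervals since January 1, 1601 UTC) into a DateTime.
function ConvertFrom-PwdLastSet {
    param(
        [Parameter(Mandatory)] [long] $Value
    )
    # 0 has no date meaning ("must change password at next logon"), so return nothing
    if ($Value -le 0) { return $null }
    [DateTime]::FromFileTimeUtc($Value)
}

# 116444736000000000 is the file time for the Unix epoch, 1970-01-01 00:00:00 UTC
ConvertFrom-PwdLastSet -Value 116444736000000000
```

Against a real account, something like (Get-ADUser jdoe -Properties pwdLastSet).pwdLastSet would supply the raw value (jdoe being a placeholder username).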

Updating the Attribute

Making changes to an Active Directory user account is often done with Set-ADUser, and this is no different. If you look at the help for Set-ADUser, you can see there are a lot of parameters representing attributes/properties you can change. The pwdLastSet attribute isn’t on the list, however. Plenty of forum posts and examples show that the parameter to use is -Replace. The -Replace parameter accepts a hashtable as its value, so the syntax is pretty straightforward: the property name you want to update, and the value you want to replace it with.

Whether a user account’s password is expired or not, replacing the pwdLastSet value with 0 effectively expires the password immediately; we’re clearing the slate. The next step seems odd: we replace the pwdLastSet value with -1. Active Directory doesn’t actually store -1 (and in a signed large integer, -1 isn’t the largest possible value anyway). Instead, it treats a write of -1 as an instruction to set pwdLastSet to the current date and time. Since expiration is calculated as pwdLastSet plus the domain’s maximum password age, a 90-day policy puts the new expiration date 90 days after the command runs. The general consensus online is that both of these steps need to be taken: set it to 0, then -1. I haven’t done a deep dive on why, but if anyone has an explanation feel free to hit me up.
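To make the arithmetic concrete, here’s a sketch that computes an expiration date as the time the password was last set plus the maximum password age. Get-PasswordExpirySketch is a hypothetical name, and it assumes a single domain-wide policy (fine-grained password policies would change the max age). In a real domain the max age would come from something like (Get-ADDefaultDomainPasswordPolicy).MaxPasswordAge.

```powershell
# Sketch: expiration = time the password was last set + the policy's maximum password age.
# Hypothetical helper; assumes one domain-wide policy (no fine-grained password policy).
function Get-PasswordExpirySketch {
    param(
        [Parameter(Mandatory)] [long] $PwdLastSet,         # raw pwdLastSet file time
        [Parameter(Mandatory)] [TimeSpan] $MaxPasswordAge  # e.g. 90 days
    )
    # 0 means "must change at next logon", so there's no meaningful expiration date
    if ($PwdLastSet -le 0) { return $null }
    [DateTime]::FromFileTimeUtc($PwdLastSet) + $MaxPasswordAge
}

$maxAge = New-TimeSpan -Days 90

# Before the reset: a password last set on 1970-01-01 UTC expired 90 days later (1970-04-01 UTC)
$before = Get-PasswordExpirySketch -PwdLastSet 116444736000000000 -MaxPasswordAge $maxAge

# After the reset: with pwdLastSet reflecting the current time, expiration lands ~90 days out
$after = Get-PasswordExpirySketch -PwdLastSet ([DateTime]::UtcNow.ToFileTimeUtc()) -MaxPasswordAge $maxAge

$before
$after
```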

The Script

Seems simple enough, right? The script just needs to set the pwdLastSet attribute for a given user to 0 and then -1. One of the things I always ask when I’m writing PowerShell for someone else’s consumption is “how” they want to use it. Do they want to manually launch PowerShell and execute the script by calling out its path? Do they want to double-click a shortcut and have the script execute? Do they just want a function they can run as a CLI tool in PowerShell?

In our case the help desk doesn’t spend a lot of time with PowerShell and would prefer to just double-click a shortcut. I, on the other hand, prefer to run PowerShell scripts from an open PowerShell session, so I figured I would accommodate both.

At its simplest, the script really just needs to do this:

```powershell
# Requires the ActiveDirectory module (RSAT)
Import-Module ActiveDirectory

$User = Read-Host -Prompt "Enter username you wish to reset"
# Expire the password, then reset the clock
Set-ADUser -Identity $User -Replace @{pwdLastSet = 0}
Set-ADUser -Identity $User -Replace @{pwdLastSet = -1}
```

However, I wanted the script to have some sanity checks, show before and after info on the account’s password expiration, allow alternate credentials, and run in a loop in case there were multiple accounts to target. I also wanted it to support running as the target of a shortcut as well as interactively for users who prefer that.

Script on GitHub

Status Update

Hi all. Just wanted to provide a brief status update. It’s been a while since my last post, and while I have been busy and making frequent use of PowerShell, I haven’t had anything novel that I felt like sharing.

I’ve still been using the Get-GeoLocation function quite a bit, as well as another function I wrote called Get-WhoIsIP. It’s nothing crazy and primarily uses the “http://ipwho.is” API for results. I spend a lot of time using PowerShell as a CLI and want a way to quickly look up IP addresses to determine ownership. Sometimes lots of IP addresses.

Mostly, I’ve had a lot of occasion to help other people with their PowerShell-related needs. Here are some highlights I can think of:

- Modified a domain join script to handle adding a computer to groups as part of the process.

- A script, run as part of a Scheduled Task, that emails a CSV of specific types of accounts that need to change their password (based on age).

- A script for someone who needs to rename hundreds of files based on text found within them. It was previously a manual process; now, thanks to PowerShell (and regex), something that used to take hours each week takes a couple of seconds.

- A couple of different Active Directory off-boarding scripts to consistently handle removing accounts.

- A script that resets an Active Directory user’s password expiration clock, effectively changing the “Password Last Set” time to the time of script execution.

- A series of scripts with Scheduled Task setups/executions, data output, and data collection, relying heavily on DPAPI and AES encryption for data protection. The pseudocode is basically: execute script, save data, acquire data, alert on data. This also involves build scripts, deployment scripts, removal scripts, and a few Scheduled Tasks. It was a lot of fun to write.

- Helped on a CTF that involved a lot of deobfuscating PowerShell and finding flags within the code.
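The file-renaming item above boils down to a pattern like the following. This is a sketch under assumptions, not the actual script: the function name Rename-FromContent, the “Invoice #1234” pattern, and the Invoice-<number> naming scheme are all made up for illustration.

```powershell
# Hypothetical sketch: rename each file using text captured from its contents.
# The pattern and naming scheme are illustrative assumptions.
function Rename-FromContent {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $Pattern = 'Invoice #(\d+)'
    )
    # foreach collects the file list up front, so renames don't affect the enumeration
    foreach ($file in Get-ChildItem -Path $Path -File) {
        $text = Get-Content -Path $file.FullName -Raw
        if ($text -match $Pattern) {
            # $Matches[1] holds the first capture group from the -match above
            $newName = 'Invoice-{0}{1}' -f $Matches[1], $file.Extension
            if ($PSCmdlet.ShouldProcess($file.Name, "Rename to $newName")) {
                Rename-Item -LiteralPath $file.FullName -NewName $newName
            }
        }
        else {
            Write-Warning "No match in $($file.Name)"
        }
    }
}
```

Because the function declares SupportsShouldProcess, running it with -WhatIf first shows every planned rename without touching anything.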

That’s about it. I’m still looking for the idea that’s going to inspire me to write another PowerShell module. For now I’ll keep maintaining my team’s internal module and my publicly available ProtectStrings module.

-

Get-GeoLocation

Getting GPS Coordinates From A Windows Machine

Since 2020 a lot of organizations have ended up with a more distributed workforce than they previously had. This means a lot of cloud services, VPNs, and company assets out in the wild. Some tools will let you build a “geo fence” around your infrastructure and block access to resources if the source is from a country other than your approved list. Let’s say you only have employees in the United States, you could specify in your cloud services that if anyone attempts to access your email from a country other than the United States, the authentication attempt would be refused.

This is generally accomplished through IP-based geolocation. We’ve all seen TV shows and movies where they get a person’s IP address and then magically pinpoint their location down to a couple of feet. In reality, IP-based geolocation isn’t nearly that precise. If you want to see for yourself, I used this website quite a bit during testing: IPlocation.net

The site will determine your apparent public IP address (note that a VPN could change this) and then get some publicly available information about the IP from a WHOIS lookup. It will also query several geo-IP databases and come up with GPS coordinates for your IP. For me, the site comes up with a location that’s about 36 miles off. That’s certainly good enough to determine what country I’m in, and maybe even what state, but that’s about it. For preventing out-of-country login attempts that’s probably fine, but if I fire up NordVPN and specify Germany as my destination, IPlocation.net will now say I’m in Germany. That’s how most cloud services will see it as well.

For the sake of argument, let’s say you work for an organization that allows remote work within the United States, but you want to take a trip out of the country and do some remote work. A VPN might be enough to convince all of your work resources that you were still in the United States without throwing any alarms. I wanted to determine a computer’s location more accurately and found that there really aren’t a lot of options out there, except for the Location Services that exist on most Windows computers. One good way to use this service is through PowerShell and a .NET namespace.

GeoCoordinateWatcher

While searching the internet for how to get GPS coordinates out of a computer I kept running across the same code, or slight variations of it.

From StackOverflow:

```powershell
Add-Type -AssemblyName System.Device #Required to access System.Device.Location namespace
$GeoWatcher = New-Object System.Device.Location.GeoCoordinateWatcher #Create the required object
$GeoWatcher.Start() #Begin resolving current location

while (($GeoWatcher.Status -ne 'Ready') -and ($GeoWatcher.Permission -ne 'Denied')) {
    Start-Sleep -Milliseconds 100 #Wait for discovery.
}

if ($GeoWatcher.Permission -eq 'Denied'){
    Write-Error 'Access Denied for Location Information'
} else {
    $GeoWatcher.Position.Location | Select Latitude,Longitude #Select the relevant results.
}
```

From Microsoft:

```powershell
# Time to see if we can get some location information
# Required to access System.Device.Location namespace
Add-Type -AssemblyName System.Device
# Create the required object
$GeoWatcher = New-Object System.Device.Location.GeoCoordinateWatcher
# Begin resolving current location
$GeoWatcher.Start()

while (($GeoWatcher.Status -ne 'Ready') -and ($GeoWatcher.Permission -ne 'Denied')) {
    #Wait for discovery
    Start-Sleep -Seconds 15
}

#Select the relevant results.
$LocationArray = $GeoWatcher.Position.Location
```

From GitHub:

```powershell
function Get-GeoLocation{
    try {
        Add-Type -AssemblyName System.Device #Required to access System.Device.Location namespace
        $GeoWatcher = New-Object System.Device.Location.GeoCoordinateWatcher #Create the required object
        $GeoWatcher.Start() #Begin resolving current location

        while (($GeoWatcher.Status -ne 'Ready') -and ($GeoWatcher.Permission -ne 'Denied')) {
            Start-Sleep -Milliseconds 100 #Wait for discovery.
        }

        if ($GeoWatcher.Permission -eq 'Denied'){
            Write-Error 'Access Denied for Location Information'
        } else {
            $GL = $GeoWatcher.Position.Location | Select Latitude,Longitude #Select the relevant results.
            $GL = $GL -split " "
            $Lat = $GL[0].Substring(11) -replace ".$"
            $Lon = $GL[1].Substring(10) -replace ".$"
            return $Lat, $Lon
        }
    }
    # Write Error is just for troubleshooting
    catch {
        Write-Error "No coordinates found"
        return "No Coordinates found"
    }
}

$Lat, $Lon = Get-GeoLocation
```

In my preferred editor (Visual Studio Code) I copied over some of these and started experimenting. It’s clear they’re loading the .NET “System.Device” assembly in order to create an instance of a “GeoCoordinateWatcher” object. I looked this class up so I could read more about it straight from Microsoft.

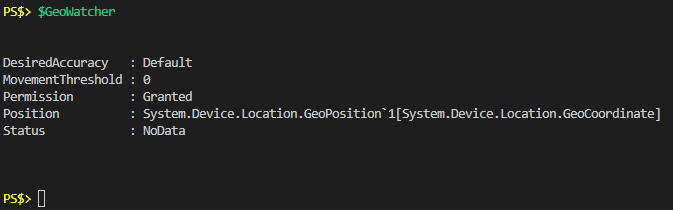

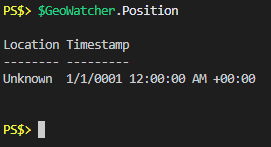

I always like stepping through code line by line when I’m writing it and exploring what properties and methods the objects I’m dealing with have. If we execute the first couple lines we’ve seen in all of these examples we’ll have an object we can play with:

```powershell
Add-Type -AssemblyName System.Device
$GeoWatcher = New-Object System.Device.Location.GeoCoordinateWatcher
```

Then simply call the new object and see what it says:

From this we can see that my “Permission” property is “Granted”, “Status” is “NoData” and “Position” looks like it contains some additional objects. I can infer from the code examples I pointed out that there must be some occasions where “Permission” is actually “Denied” but nobody seems to talk about that so for now I’ll just be thankful I’m not in that boat and move on. What’s in the “Position” property?

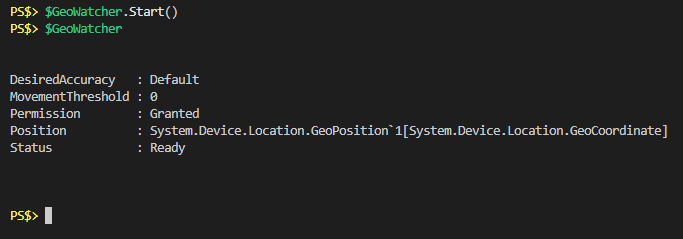

The next step is calling the “Start” method on the object.

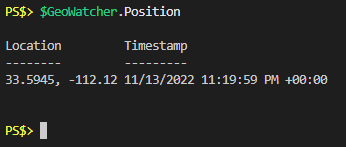

I only waited a second or two before calling the object again and as you can see the “Status” was “Ready” pretty quickly. If I look at the “Position” property again we see that it actually has value now:

Wow, awesome. GPS coordinates and a timestamp. The coordinates are returned in decimal degrees, which can be copied and pasted right into Google Maps to show you the location.

Accuracy

Where is Location Services getting this information from? Well, it’s not 100% clear from just this object class alone. The “System.Device” namespace page has this to say about it:

Location information may come from multiple providers, such as GPS, Wi-Fi triangulation, and cell phone tower triangulation.

I’ve read similar remarks on forums regarding this service, but that’s about as deep as it goes. Through my own testing, it seems that if there are no radios (Wi-Fi, GPS, cellular) on the computer, it will do some type of geolocation lookup based on the apparent public IP address. However, if even a USB Wi-Fi dongle is plugged in, the returned GPS coordinates can be accurate to within a few yards. I haven’t gotten to test on a computer with a cellular card in it, but I assume it would be similarly accurate.

String Manipulation: The Results

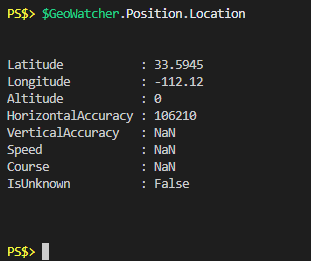

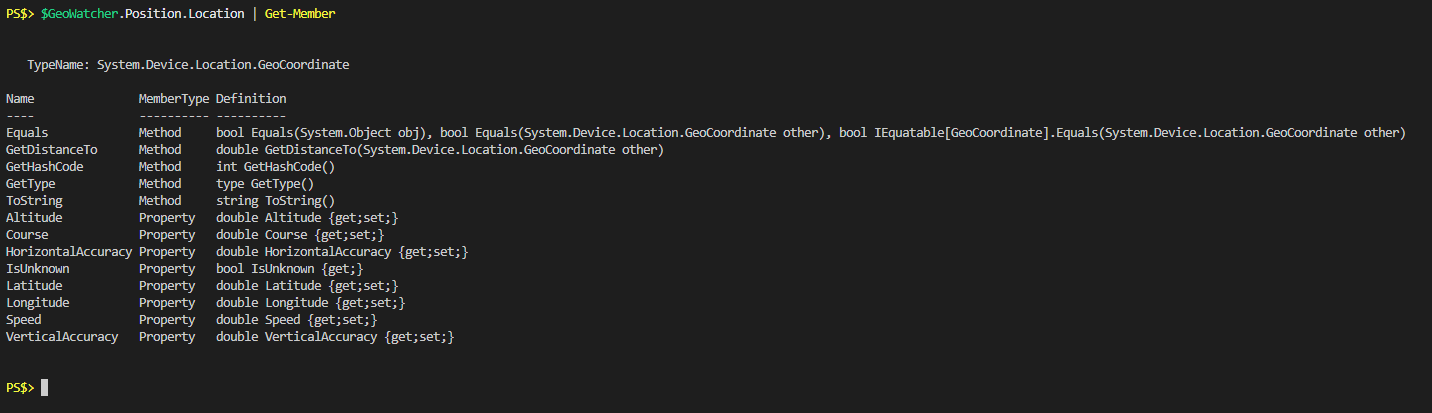

One thing I noticed in most of the examples of the code was that people were specifically calling the longitude and latitude of the “Position” property. Remember above that when calling the “Position” property it returned a location and a timestamp, and the location was shown as decimal degrees. Call the “Location” property of the “Position” property and you get a bigger picture:

Now we can see that when viewing the “Position” property, some object formatting takes place to show us the latitude and longitude as comma-separated numbers. In reality, the “Location” property itself has 8 properties, and we’re only interested in “Latitude” and “Longitude”. There are examples out there of different ways to manipulate these to get what you want, but I’m always a big fan of piping an object to Get-Member to see what’s available:

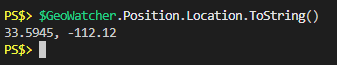

I see a “ToString” method, I wonder what that looks like:

Great, I’m done. They did all the work for me, and all those other examples of using Select-Object, or splitting, or whatever can be ignored.

Permissions

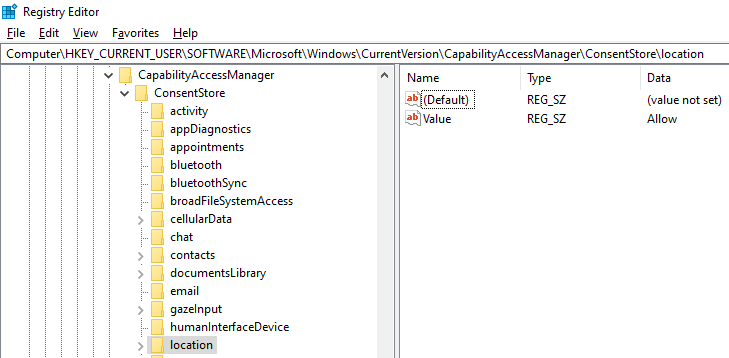

One thing you see in every example is a reference to the “Permission” property possibly being listed as “Denied”. I was able to find a couple of computers where this was the case and wanted to understand what was controlling that and how I could possibly overcome it since no one seemed to talk about it in the posts surrounding the above code examples.

The short story is that it depends on whether Location Services is allowed at both the computer configuration and the user configuration level. I make that distinction because there’s a registry key in both the LocalMachine and CurrentUser hives that applies to this. The path is:

Software\Microsoft\Windows\CurrentVersion\CapabilityAccessManager\ConsentStore\location\Value

where “Value” is the name of the registry value under the location key.

At the computer configuration level if this is set to “Deny” then Location Services won’t work and you’ll need admin rights to change that registry key. If the computer configuration is set to “Allow” but the current user configuration is set to “Deny” then the registry key can be changed without administrative privilege.

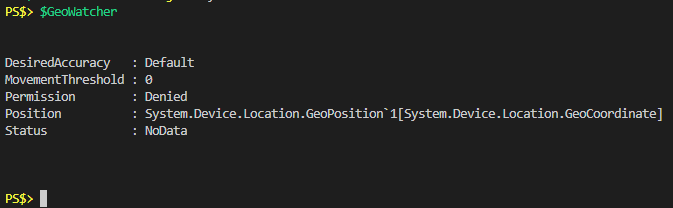

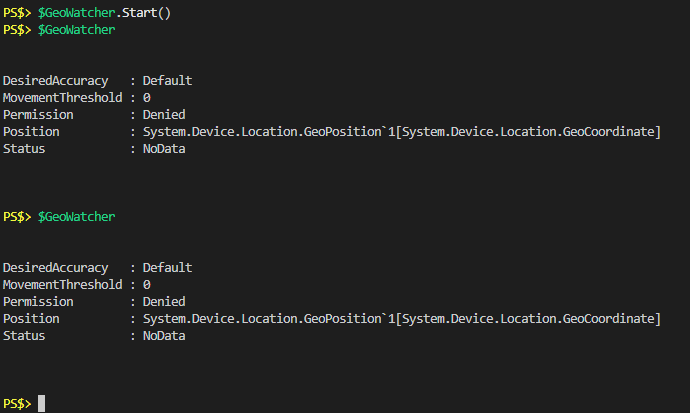

To show you what I mean, I’ve set my current user location registry key to “Deny” and recreated my GeoCoordinateWatcher object:

As you can see the “Permission” property shows “Denied”. Calling the “Start” method on the object does not throw an error, and the “Status” property never changes from “NoData”.

The while loop in all of the code examples relies on “Permission” being something other than “Denied”, so in my current state the script would flow right through the while loop and move on, possibly writing some kind of error to the host.

What if instead we checked the registry key’s value before trying to start the process? Then, if we have the appropriate permissions, we can change the value, do the work, and change it back when we’re done.

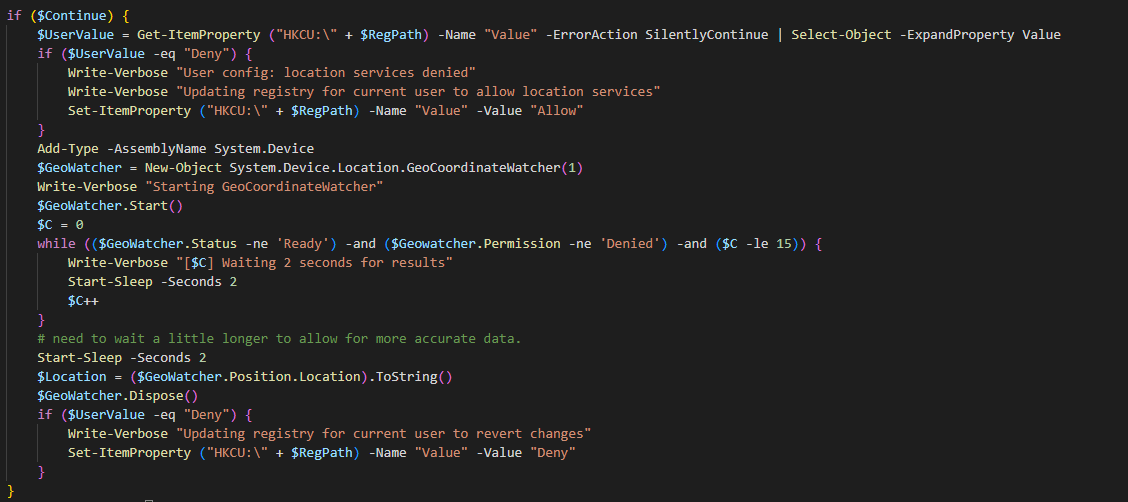

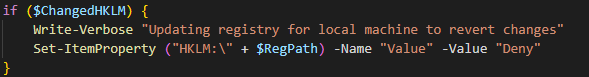

Let’s look at what I would do instead and then talk about it.

Code

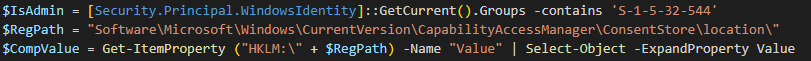

```powershell
$IsAdmin = [Security.Principal.WindowsIdentity]::GetCurrent().Groups -contains 'S-1-5-32-544'
$RegPath = "Software\Microsoft\Windows\CurrentVersion\CapabilityAccessManager\ConsentStore\location\"
$CompValue = Get-ItemProperty ("HKLM:\" + $RegPath) -Name "Value" | Select-Object -ExpandProperty Value

if ($CompValue -ne "Allow" -and $IsAdmin) {
    Write-Verbose "Admin rights present. Editing registry to allow location services"
    Set-ItemProperty ("HKLM:\" + $RegPath) -Name "Value" -Value "Allow"
    $ChangedHKLM = $true
    $Continue = $true
}
elseif ($CompValue -eq "Allow") {
    Write-Verbose "Local machine allows for location services"
    $Continue = $true
}
else {
    Write-Verbose "No admin rights and location services denied at machine level"
    $Location = "Permission"
    $Continue = $false
}

if ($Continue) {
    $UserValue = Get-ItemProperty ("HKCU:\" + $RegPath) -Name "Value" -ErrorAction SilentlyContinue | Select-Object -ExpandProperty Value
    if ($UserValue -eq "Deny") {
        Write-Verbose "User config: location services denied"
        Write-Verbose "Updating registry for current user to allow location services"
        Set-ItemProperty ("HKCU:\" + $RegPath) -Name "Value" -Value "Allow"
    }

    Add-Type -AssemblyName System.Device
    $GeoWatcher = New-Object System.Device.Location.GeoCoordinateWatcher(1)
    Write-Verbose "Starting GeoCoordinateWatcher"
    $GeoWatcher.Start()

    $C = 0
    while (($GeoWatcher.Status -ne 'Ready') -and ($Geowatcher.Permission -ne 'Denied') -and ($C -le 15)) {
        Write-Verbose "[$C] Waiting 2 seconds for results"
        Start-Sleep -Seconds 2
        $C++
    }
    # need to wait a little longer to allow for more accurate data.
    Start-Sleep -Seconds 2
    $Location = ($GeoWatcher.Position.Location).ToString()
    $GeoWatcher.Dispose()

    if ($UserValue -eq "Deny") {
        Write-Verbose "Updating registry for current user to revert changes"
        Set-ItemProperty ("HKCU:\" + $RegPath) -Name "Value" -Value "Deny"
    }
}

if ($ChangedHKLM) {
    Write-Verbose "Updating registry for local machine to revert changes"
    Set-ItemProperty ("HKLM:\" + $RegPath) -Name "Value" -Value "Deny"
}

[PSCustomObject]@{
    Computer   = $Env:COMPUTERNAME
    Location   = $Location
    NetAdapter = (Get-NetAdapter -Physical | Where-Object {$_.Status -eq "Up"} | Select-Object -ExpandProperty Name) -Join ','
}
```

Let’s talk through this.

The first line is a method for determining if the current running user is in the local Administrators group. Then we save the bulk of a registry path for use later, and then check the HKLM value in the registry to see if Location Services are allowed.

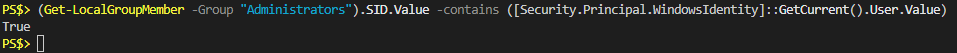

When I tested this on several domain-joined computers it worked great (and the technique was new to me), but as I write this on my personal computer I see some interesting behavior. My local account (a Microsoft account) is in the local Administrators group, but that first line returns false. Checking manually in the GUI shows that I am in fact in that group, but the “Groups” property from that code doesn’t list it. So I flipped the logic around: instead I got the members of that group and checked whether they contain the SID of the current user, and that returns true.
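The flipped check might look something like the sketch below. It's an illustration under assumptions rather than the exact code from Get-GeoLocation: the function name Test-IsLocalAdmin is hypothetical, it relies on the Get-LocalGroupMember cmdlet from the Microsoft.PowerShell.LocalAccounts module (Windows only), and it only sees direct members, so a user who is an admin through a nested domain group would come back false.

```powershell
# Hypothetical helper: is the current user a direct member of the local Administrators group?
function Test-IsLocalAdmin {
    [CmdletBinding()]
    param()
    # SID of the user running this code, as a string such as S-1-5-21-...
    $CurrentSid = [Security.Principal.WindowsIdentity]::GetCurrent().User.Value
    # S-1-5-32-544 is the well-known SID of the built-in Administrators group,
    # so this works even where the group has a localized name
    $Members = Get-LocalGroupMember -SID 'S-1-5-32-544'
    # Member enumeration collects every member's SID string before the comparison
    return ($Members.SID.Value -contains $CurrentSid)
}
```

Usage would simply be `$IsAdmin = Test-IsLocalAdmin` in place of the first line of the script.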

Ok, next section.

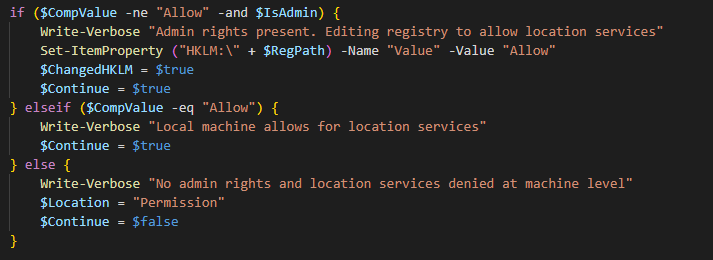

A little if/elseif/else action here. If Location Services is currently not allowed at the computer level and we’re running as admin, then change the registry and set a couple of variables. Else, if the value is already set to “Allow”, then just set “$Continue” to true. Otherwise (we’re not admin and location is denied machine-wide), set that same variable to false. In that case the next section, which checks that variable first, is skipped.

This is where we do the actual work. We’ve checked whether Location Services is allowed at the computer level, and if it is (or we were able to allow it), “$Continue” will be true. We start off by essentially doing the same registry check for the Current User hive. If Location Services isn’t allowed there, we change it to allow. Then we load the System.Device assembly and create our GeoCoordinateWatcher object. Note the “(1)” at the end of that: it’s the value of GeoPositionAccuracy.High, so the watcher is created in “High Accuracy” mode. Since we’re only going to be leveraging it for a few seconds, I see no downside to this. Then we start the watcher, and I also start a counter by setting “$C” to zero. No one else had this in their code, but I wasn’t sure what the maximum amount of time this process might take was, and I didn’t want to accidentally create an infinite loop, so my while loop has 3 conditions. The final condition, the counter, must be less than or equal to 15. With the Start-Sleep statement within the loop set to 2 seconds, the loop can run for at most about 30 seconds.
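If you want the same protection against an infinite loop in other scripts, the counter can be swapped for a time budget. The sketch below is a generic alternative, not what Get-GeoLocation does: Wait-Condition is a hypothetical helper name, and it measures elapsed time with System.Diagnostics.Stopwatch instead of counting iterations.

```powershell
# Hypothetical helper: poll a condition until it is true or a time budget runs out
function Wait-Condition {
    [CmdletBinding()]
    param(
        # Script block that returns $true when we can stop waiting
        [Parameter(Mandatory = $true)]
        [scriptblock]$Condition,
        # Maximum total time to wait
        [int]$TimeoutSeconds = 30,
        # Pause between checks
        [int]$IntervalSeconds = 2
    )
    $Timer = [System.Diagnostics.Stopwatch]::StartNew()
    while (-not (& $Condition)) {
        if ($Timer.Elapsed.TotalSeconds -ge $TimeoutSeconds) {
            # Gave up: the condition never became true in time
            return $false
        }
        Start-Sleep -Seconds $IntervalSeconds
    }
    return $true
}
```

In the location script the loop could then become `Wait-Condition -Condition { $GeoWatcher.Status -eq 'Ready' -or $GeoWatcher.Permission -eq 'Denied' } -TimeoutSeconds 30`, which states the upper bound in seconds directly.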

I then found through some testing that if you just immediately go from “Ready” to checking for the location that it may not actually be ready. Unclear what’s happening in the background, but if you just wait 2 more seconds it seems to allow for enough time. Then I take the positional data and use its own “ToString” method to save the GPS coordinates to a variable. Dispose of the GeoWatcher object and if the user’s registry value was changed by this script, change it back. The next section does the same thing for the computer registry hive.

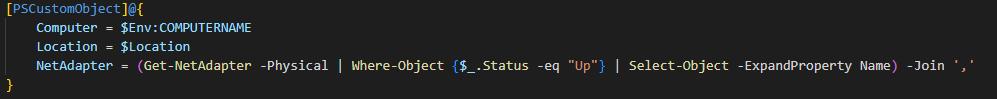

Then finally the last section.

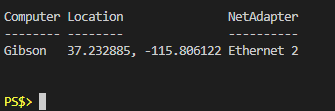

This just creates, and returns, a PSCustomObject with three properties: The current computer name, the GPS coordinates, and the name of the connected network adapters. Let’s execute all of this and see what that looks like.

Great! Now we have the name of the computer, some pretty accurate-looking GPS coordinates, and the network adapter(s) that were present at the time. I included the adapters because the presence of Wi-Fi seems to be a pretty big factor in how accurate the GPS coordinates are, and I thought it might be nice for reference.

I wrote it this way because I figured that, more often than not, I’m going to be running this against a remote computer and might want to pass this code as a script block. With that in mind, this is all collected together as a function called Get-GeoLocation, which has a parameter called “ComputerName” for specifying a remote computer you wish to run it against. This has only been tested in one Active Directory environment so far, but the code is available on Github in case you want to play around with it on your own.
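As a rough sketch of that pattern (not the actual Get-GeoLocation source), a function can keep its logic in a script block and either run it locally or hand it to Invoke-Command. The name Invoke-OptionalRemote is hypothetical, and remote use assumes PowerShell Remoting (WinRM) is enabled on the target.

```powershell
# Hypothetical wrapper: run a script block locally, or remotely when -ComputerName is given
function Invoke-OptionalRemote {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [scriptblock]$ScriptBlock,
        [string]$ComputerName,
        [pscredential]$Credential
    )
    if ($ComputerName) {
        # Build Invoke-Command arguments, adding -Credential only when one was supplied
        $InvokeArgs = @{ ComputerName = $ComputerName; ScriptBlock = $ScriptBlock }
        if ($Credential) { $InvokeArgs['Credential'] = $Credential }
        Invoke-Command @InvokeArgs
    }
    else {
        # No computer name: just run the same code in the current session
        & $ScriptBlock
    }
}
```

For example, with the location code wrapped in braces and stored in `$GeoScript`, `Invoke-OptionalRemote -ScriptBlock $GeoScript -ComputerName 'PC01'` would run it on PC01, while leaving off `-ComputerName` runs it locally.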

GitHub Get-GeoLocation

Closing Thoughts

When I started down this rabbit hole of trying to reliably determine a computer’s location, I really did not want to use Powershell to do it. I know I have a tendency to use Powershell for everything, and I wanted to use tools that we already had available to us. Unfortunately, everything else I found seemed to rely on Geo-IP databases, which return fairly inaccurate results. This was also a fun exercise in taking “found in the wild” code a step further and extending it with more error handling and features.

Remember to check out Microsoft’s documentation when you can, pipe to Get-Member, and just explore in general. It’s interesting what you’ll find. -

Quick Tip on ParameterSetNames

I was writing a new function today. Oddly enough I was actually re-writing a function today and hadn’t realized it. Let me explain.

Story Time

About a half dozen times a month I find myself inspecting a remote computer and invariably the question comes up “how long has this computer been up?” I always find myself looking up how to get computer uptime in Powershell and I always look for Doctor Scripto’s blog post where he shows a one-liner that can tell you a computer’s uptime:

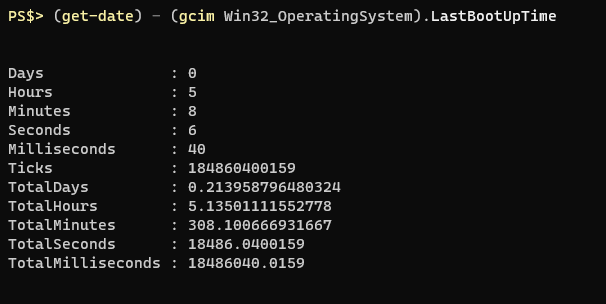

```powershell
(get-date) - (gcim Win32_OperatingSystem).LastBootUpTime
```

The output of the command looks like this:

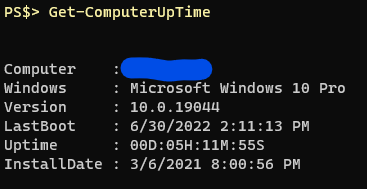

Totally sufficient. I’m usually in a remote Powershell session anyway and just copy/paste the command. I decided today that I would finally buckle down and write a function for my everyday module that would let me query my own computer’s uptime as well as a remote computer’s. Since the info is also in the Win32_OperatingSystem class, I thought I would include the install date too; at work this is often the “imaged” date, which can be helpful to know.

Within about 10 minutes I had a rough draft of the function and it was successful in returning the information I wanted.

I clicked “Save As…” in VS Code and navigated to the folder where the other functions were stored only to see that there was already a function of the same name there dated just two months ago. I opened it to look at the contents and found that I had indeed written it, with some inspiration borrowed from online (and credited as such) but I didn’t actually like the way it was returning info. It also had a bit of a problem when it came to handling pipeline input. I relocated the “old” one and saved the new one in its place.

Tip of the day: DefaultParameterSetName="none"

The beginning of my new function was looking a lot like most of my new functions; I had declared cmdletbinding and a parameter block. Originally I had a “ComputerName” parameter to allow for specifying a computer other than the one you’re on, and then I thought to add a “Credential” parameter in case I needed to provide different credentials for the remote computer.

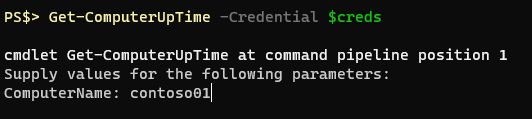

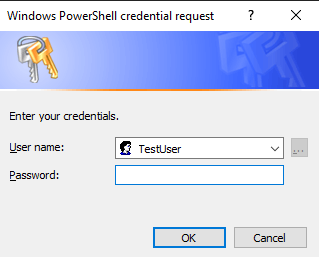

```powershell
[Cmdletbinding()]
Param (
    [Parameter(Mandatory=$false,ValueFromPipeline=$true,Position=0)]
    [String]$ComputerName,
    [Parameter(Mandatory=$false,Position=1)]
    [pscredential]$Credential
)
```

The rest of the function was going fine, but I had the thought that I wanted the function to require the “ComputerName” parameter if the “Credential” parameter was provided. I knew about parameter sets and grouping parameters together by a name, but I wasn’t sure how this might work. I read a couple of quick blog posts and saw that if I made the “ComputerName” parameter Mandatory and put it in the same parameter set as the “Credential” parameter it would work, but then it was ALWAYS asking for a value for the “ComputerName” parameter. In other functions where I had multiple parameter sets, this was handled by specifying a “DefaultParameterSetName” in the CmdletBinding definition. However, this function was only ever going to have one parameter set name. My first thought was “what if I just set it to something that doesn’t exist?”

I quickly changed the first part of my function to look like this, and it worked!

```powershell
[Cmdletbinding(DefaultParameterSetName="none")]
Param (
    [Parameter(ParameterSetName="Remote",Mandatory=$true,ValueFromPipeline=$true,Position=0)]
    [String]$ComputerName,
    [Parameter(ParameterSetName="Remote",Mandatory=$false,Position=1)]
    [pscredential]$Credential
)
```

Now when calling the function with no arguments, it does not ask for a “ComputerName” and returns information about the current computer. If I call the function with the “Credential” parameter but don’t supply a “ComputerName” value, it will ask for one.

It’s a pretty unlikely scenario that I, or someone else, would call the function with JUST the “Credential” parameter and not the computer name; this was more of a proving-ground type of situation.
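For reference, here's a hedged sketch of how the parameter block and the uptime query could fit together. It isn't the published function: Get-ComputerUptime is a hypothetical name (chosen to avoid clashing with the Get-Uptime cmdlet that ships with PowerShell 6 and later), it assumes CIM/WinRM access to remote machines, and it calculates uptime against the local clock.

```powershell
# Hypothetical sketch: uptime and OS install date for the local or a remote computer
function Get-ComputerUptime {
    [CmdletBinding(DefaultParameterSetName = 'none')]
    param(
        # Mandatory only within the 'Remote' set, so a bare call never prompts for it
        [Parameter(ParameterSetName = 'Remote', Mandatory = $true, ValueFromPipeline = $true, Position = 0)]
        [string]$ComputerName,

        # Supplying only -Credential selects 'Remote', which then prompts for -ComputerName
        [Parameter(ParameterSetName = 'Remote', Mandatory = $false, Position = 1)]
        [pscredential]$Credential
    )
    process {
        if ($PSCmdlet.ParameterSetName -eq 'Remote') {
            # Open a CIM session, adding -Credential only when one was supplied
            $SessionArgs = @{ ComputerName = $ComputerName }
            if ($Credential) { $SessionArgs['Credential'] = $Credential }
            $Session = New-CimSession @SessionArgs -ErrorAction Stop
            try {
                $OS = Get-CimInstance -ClassName Win32_OperatingSystem -CimSession $Session
            }
            finally {
                Remove-CimSession -CimSession $Session
            }
        }
        else {
            $OS = Get-CimInstance -ClassName Win32_OperatingSystem
        }
        [PSCustomObject]@{
            Computer    = $OS.CSName
            LastBoot    = $OS.LastBootUpTime
            # Uses the local clock, so clock skew between machines shows up here
            Uptime      = (Get-Date) - $OS.LastBootUpTime
            InstallDate = $OS.InstallDate
        }
    }
}
```

Checking `$PSCmdlet.ParameterSetName` is what lets one function branch between the local and remote paths without a separate switch parameter.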

If you’d like to see the function in its entirety you can find it on my Github

That’s all for now. Until the next light bulb moment.

-

ProtectStrings. A Module Story

I’ve had an itch lately to do something with AES encryption in Powershell. I’ve tossed around the idea of building a password manager in Powershell, but I get so focused on the little details that I lose sight of the fact that Microsoft pretty much has that covered.

I’ve used ConvertTo/From-SecureString quite a bit for string management in scripts, and I’ve even gone as far as creating a small module that allows me to save a credential using DPAPI encryption to an environment variable for recall later. I have yet to do anything with AES encryption, however. I have some scratch sheets saved regarding a more robust password manager module, but nothing has really come of it yet.

Two things happened recently to change some of this: I found myself with a need to encrypt some strings locally and save them to a file, and a coworker went down the rabbit hole of protecting PS credential objects with AES encryption. What follows is the story of ProtectStrings: A Powershell Module.

Table Of Contents

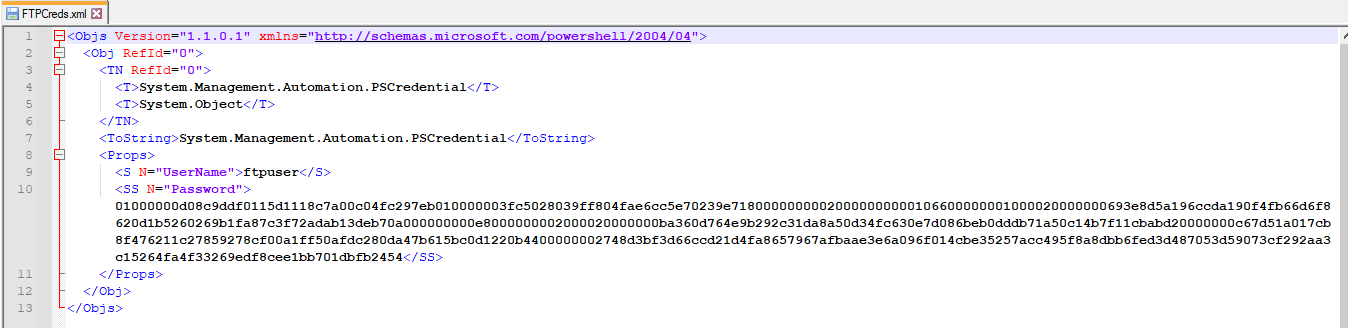

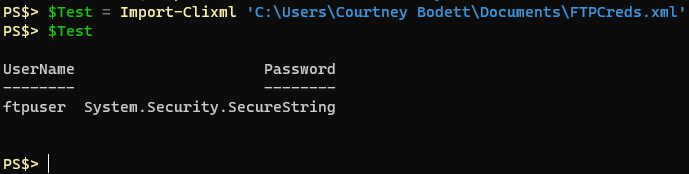

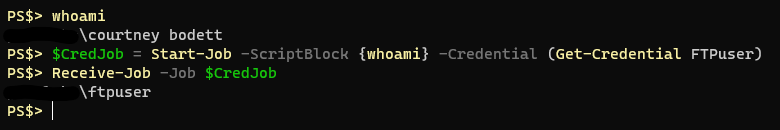

Protecting Credentials with AES

There are a lot of good articles out there on how to save Powershell credentials securely for use in scripts. Heck, I wrote one. For the sake of this post though let’s go over, specifically, the use of AES encryption for saving Powershell Credentials.

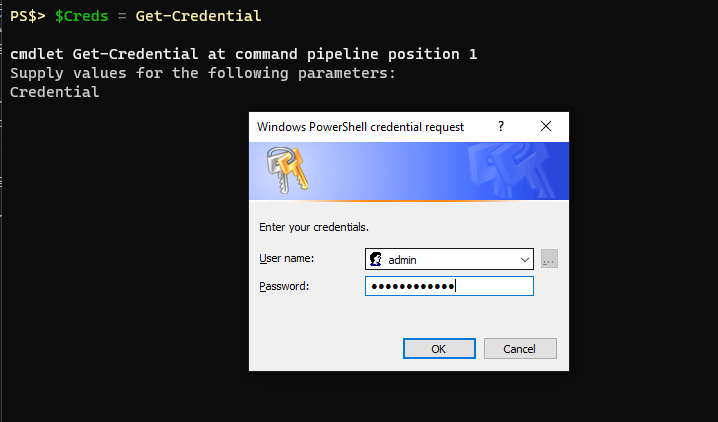

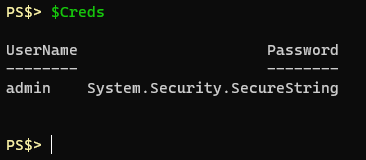

SecureString objects in Powershell are protected by Microsoft’s DPAPI encryption. Here’s the Wikipedia Page on DPAPI. Essentially the unique encryption key is derived from the user running it on the system they’re on. If a different user of the same computer tried to decrypt it using DPAPI it would fail. Move the DPAPI encrypted cipher text to another machine and try to decrypt it and it will fail as well. Not very portable, but it’s very convenient on the system you’re on. Using the Get-Credential cmdlet will yield a pop-up window where you can securely supply the password.

```
Get-Credential TestUser

UserName                     Password
--------                     --------
TestUser System.Security.SecureString
```

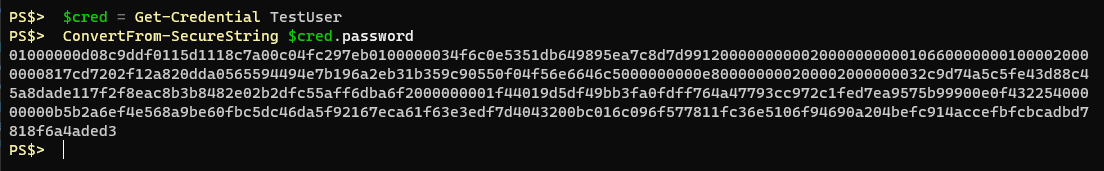

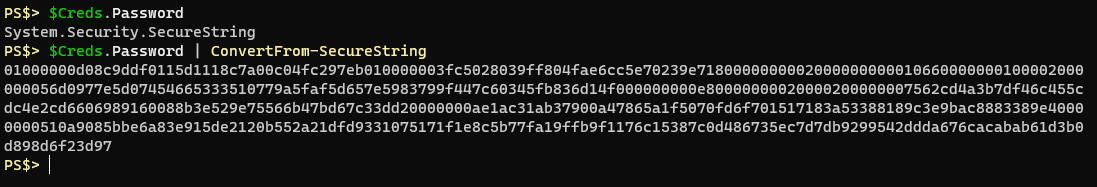

Using the Get-Credential cmdlet or the Read-Host cmdlet with the -AsSecureString parameter will net you a property that shows as a System.Security.SecureString object. If you were to convert from that SecureString object you would be left with cipher text:

Now, you could save this text to a file, later read it back, convert it to a SecureString object and go about your business, but as I explained earlier, it’s not portable because of the way DPAPI encryption works. Consider the following:

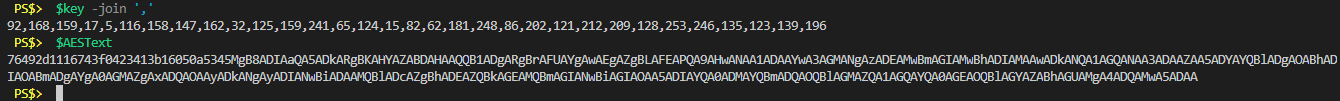

```powershell
# Make a new byte array of 32 bytes
$Key = New-Object System.Byte[] 32
# Randomize each byte value using the .NET random number generator
[Security.Cryptography.RNGCryptoServiceProvider]::Create().GetBytes($Key)
# Get the text you want to protect
$Secret = Read-Host -AsSecureString
# Convert it from a SecureString object to cipher text using our AES 256-bit key
$AESText = ConvertFrom-SecureString $Secret -Key $Key
```

We create a new, completely random 32-byte (256-bit) array to use as a key with AES encryption. We get text input from the user, stored as a SecureString object, which is automatically protected in memory by DPAPI encryption. We then convert it from a SecureString object to just cipher text, and we provide that 32-byte key. We end up with Base64-encoded cipher text like this:

```
76492d1116743f0423413b16050a5345MgB8ADIAaQA5ADkARgBKAHYAZABDAHAAQQB1ADgARgBrAFUAYgAwAEgAZgBLAFEAPQA9AHwANAA1ADAAYwA3AGMANgAzADEAMwBmAGIAMwBhADIAMAAwADkANQA1AGQANAA3ADAAZAA5ADYAYQBlADgAOABhADIAOABmADgAYgA0AGMAZgAxADQAOAAyADkANgAyADIANwBiADAAMQBlADcAZgBhADEAZQBkAGEAMQBmAGIANwBiAGIAOAA5ADIAYQA0ADMAYQBmADQAOQBlAGMAZQA1AGQAYQA0AGEAOQBlAGYAZABhAGUAMgA4ADQAMwA5ADAA
```

Now, we can save that to a file AND save our $Key variable to a file for use on the same system or a different system.

```powershell
# Save the Key to a file
Out-File -FilePath "C:\Scripts\AESKey" -InputObject $Key
# Save the encrypted text to a file
Out-File -FilePath "C:\Scripts\TopSecret.txt" -InputObject $AESText
```

You can move those files to another computer, for another user even, and they can be used in this way.

```powershell
# Import my AES Key
$Key = Get-Content "C:\Scripts\AESKey"
# Import my encrypted text
$AESText = Get-Content "C:\Scripts\TopSecret.txt"
```

Here’s a picture of what their values look like (with the key comma-joined for easier viewing).

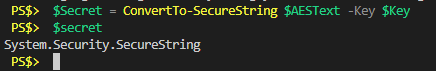

We can use that AES Key to convert our encrypted text back in to a SecureString object.

```powershell
# Convert the encrypted text in to a SecureString object
$Secret = ConvertTo-SecureString $AESText -Key $Key
```

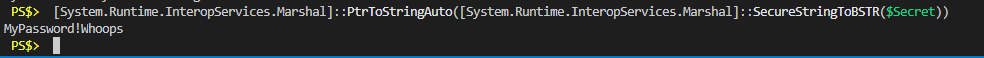

That SecureString object is now protected in memory with DPAPI encryption again. To convert it to plain text we have to use some .NET methods. Here’s a rather long one-liner that accomplishes this.

```powershell
# Convert a SecureString object to plain text
[System.Runtime.InteropServices.Marshal]::PtrToStringAuto([System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($Secret))
```

There it is. That’s how you can use a random key to convert SecureString objects to AES-protected text, transport them somewhere, and decrypt them. But you can’t just leave that AES key lying around anywhere. That would be like locking all of your valuables up in your house behind the strongest locks you could get, and then leaving the key under the doormat. We don’t protect our password managers like this, so why should it be any different for credentials in Powershell?
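One caveat with that plain-text one-liner: SecureStringToBSTR copies the secret into unmanaged memory that nothing frees, and on PowerShell 7 for Linux or macOS PtrToStringAuto can misread the UTF-16 BSTR because it assumes a single-byte encoding there. A tidier version, sketched below under a hypothetical name, reads the BSTR with PtrToStringBSTR and zeroes it with ZeroFreeBSTR (the same pair greytabby's class uses in the next section). On PowerShell 7 you can also just use ConvertFrom-SecureString -AsPlainText.

```powershell
# Hypothetical helper: SecureString -> plain text, freeing the unmanaged copy afterwards
function Get-PlainTextFromSecureString {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        [System.Security.SecureString]$SecureString
    )
    process {
        # Copy the secret into an unmanaged BSTR (UTF-16)
        $Bstr = [System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($SecureString)
        try {
            # Read it back explicitly as a BSTR, which is correct on every platform
            [System.Runtime.InteropServices.Marshal]::PtrToStringBSTR($Bstr)
        }
        finally {
            # Overwrite the unmanaged copy with zeros and release it
            [System.Runtime.InteropServices.Marshal]::ZeroFreeBSTR($Bstr)
        }
    }
}
```

The try/finally means the unmanaged copy is wiped even if reading it throws.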

Generating An AES Key

One thing I never liked about the above method was that you really need to save that AES key somewhere, because it’s impossible to remember or produce again. One day while tumbling through Github I found a module by greytabby called PSPasswordManager. What particularly caught my attention was the class definition within it for “AESCipher.” Here it is straight from Github.

```powershell
class AESCipher {
    [byte[]] $secret_key

    AESCipher() {}

    [string] secure_string_to_plain($secure_string) {
        $bstr = [System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($secure_string)
        $plain = [System.Runtime.InteropServices.Marshal]::PtrToStringBSTR($bstr)
        [System.Runtime.InteropServices.Marshal]::ZeroFreeBSTR($bstr)
        return $plain
    }

    [string] padding($key) {
        # aes key length is defined 256bit
        # padding key
        if ($key.length -lt 32) {
            $key = $key + "K" * (32 - $key.length)
        }elseif ($key.length -gt 32) {
            $key = $key.SubString(0, 32)
        }
        return $key
    }

    [void] input_secret_key() {
        $input_string = Read-Host -Prompt "Enter your secret key" -AsSecureString
        $input_plain = $this.secure_string_to_plain($input_string)
        $enc = [System.Text.Encoding]::UTF8
        $padded_key = $this.padding($input_plain)
        $key = $enc.GetBytes($padded_key)
        $this.secret_key = $key
    }

    [string] encrypt ($plain) {
        # plainpassword to securestring
        $secure_string = ConvertTo-SecureString -String $plain -AsPlaintext -Force
        # securestring to encrypted text
        $cipher = ConvertFrom-SecureString -SecureString $secure_string -key $this.secret_key
        return $cipher
    }

    [string] decrypt ($cipher) {
        # cipher text to secure string
        $secure_string = ConvertTo-SecureString $cipher -key $this.secret_key
        # secure string to plain text
        $plain = $this.secure_string_to_plain($secure_string)
        return $plain
    }
}
```

I observed that the intention was to provide a “secret key” or “master password” and then a 32 byte key would be derived from that and used to encrypt/decrypt the things inside the vault. As per usual, I got so focused on this that I failed to bring my head up and look around much at the bigger picture. I decided to just completely tax this class definition from greytabby and start working on the structure of my own password manager.

After a couple of months of only sporadically working on this, I hadn’t made much headway. A couple more class definitions and some notes about desired functions, but I was finding myself busy with other things. Having a bit of a reputation around the shop as a Powershell nut, I was asked one day to review a proposed solution provided by someone else. The request was to let some users do something on a server that requires administrative privilege, but not actually give them that privilege. The solution that was provided, via Powershell, was essentially to encrypt some admin credentials using a randomly generated AES key, then create a script on the user’s computer that would retrieve the key file and cipher text from a restricted network share and then execute the tasks on the server as those credentials. While the logic was there, some of it was security through obscurity, and ultimately it was just giving them admin credentials with extra steps. Anyone with access to the script file could see where the key file and encrypted text file were being stored and go decrypt them at will if they liked. It also meant that the keys to the castle would just be sitting on disk somewhere.

As I was calling these things out in my review, I thought of the above class definition for an “AESCipher” and I thought, “oh hey, we could just tell the users some master password that they store securely in a password manager, and then they’d use that when they run the script and it would decrypt the saved credentials.” Again, this was just giving them admin credentials with extra steps.

There was a benefit from this though because it got me looking at this class definition again and looking at specifically how he managed to take a provided password and generate the same AES key each time.

Password based key derivation, with extra steps

In the class definition let’s focus on this.

```powershell
[String] Padding($Key) {
    # AES Key Length is defined 256bit
    # Padding Key
    if ($Key.Length -lt 32) {
        $Key = $Key + "K" * (32 - $Key.Length)
    }
    elseif ($Key.Length -gt 32) {
        $Key = $Key.SubString(0, 32)
    }
    return $Key
}

[void] InputSecretKey() {
    $InputString = Read-Host -Prompt "Enter your secret Key" -AsSecureString
    $InputPlain = $this.SecureStringToPlain($InputString)
    $Enc = [System.Text.Encoding]::UTF8
    $PaddedKey = $this.Padding($InputPlain)
    $Key = $Enc.GetBytes($PaddedKey)
    $this.SecretKey = $Key
}
```

Knowing that we want to end up with a 32-byte key, the InputSecretKey() method leverages the Padding($Key) method to add extra bytes to the provided secret. If we use the secret “TopSecret”, for example, that’s 9 characters, which is good for 9 bytes if we convert it. That leaves 23 bytes we still need. What greytabby did was just an if/elseif statement: if the master password is shorter than 32 characters, append “K” as many times as needed to reach 32; if it’s longer than 32 characters, only take the first 32. The byte array for “TopSecret” would look like this:

```
84,111,112,83,101,99,114,101,116,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75,75
```

Notice all those “75”s? That’s the UTF-8 byte value for a capital “K”. I had flashbacks to working on my Natural Language Password script, where I was looking to make sure I used the best random number generator available to me. In that process I found myself on this article from Matt Graeber that went into pretty good detail about the differences between the Powershell cmdlet Get-Random and the .NET class RNGCryptoServiceProvider. The part that stuck in my brain was “entropy.” I didn’t feel good about creating an AES key based on a password that was always going to have a bunch of repetitive byte values. Armed with even just that knowledge, an attacker would find it significantly easier to brute force the original key.
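You can reproduce that array, and see how little variety is in it, with a few lines. This sketch uses String.PadRight as a stand-in for the Padding() method; it only matches that method for passwords of 32 characters or fewer, since PadRight never truncates.

```powershell
# Approximate greytabby's Padding() for short passwords: pad with 'K' to 32 characters
$Secret = 'TopSecret'
$Padded = $Secret.PadRight(32, 'K')
# Convert the padded string to UTF-8 bytes, as InputSecretKey() does
$KeyBytes = [System.Text.Encoding]::UTF8.GetBytes($Padded)
# Comma-separated byte values, matching the list above
$KeyBytes -join ','
# Number of distinct byte values among the 32: 9
($KeyBytes | Sort-Object -Unique).Count
```

Only 9 distinct values out of 32 positions, with one of them filling 23 slots, is exactly the kind of repetition that worried me.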

I started thinking more about how to better derive 32 bytes of random key values given a provided string. Unfortunately my scratch .ps1 file where I was testing different ideas is lost, but I’ll try to summarize so you can laugh at me.

Knowing I didn’t want to just add some consistent character to the string to hydrate a 32 byte array full of values I thought about some different options. I could double a given master password, or triple it, or whatever until it reached 32 bytes. There would be too obvious of a pattern in that. Oh, well, what do most authentication systems do? They hash the password. Surely Powershell must have a way to generate hashes of strings. As it turns out, it does not. There’s a Get-FileHash cmdlet, but as the name implies it’s for files. So I wrote this function to create hashes of strings.

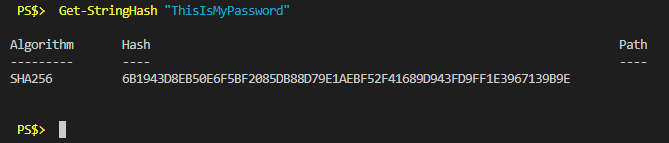

```powershell
Function Get-StringHash {
    [Cmdletbinding()]
    Param (
        [Parameter(Mandatory = $true, Position = 0, ValueFromPipeline = $true)]
        [String]$InputString,
        [Parameter(Mandatory = $false, Position = 2)]
        # MACTripleDES and RIPEMD160 are only accepted by Windows PowerShell 5.1
        [ValidateSet("SHA1","SHA256","SHA384","SHA512","MACTripleDES","MD5","RIPEMD160")]
        [String]$Algorithm = "SHA256"
    )
    Process {
        # Encode as UTF-8; casting through [char[]] to [byte[]] fails on characters above 255
        $Stream = [IO.MemoryStream]::New([Text.Encoding]::UTF8.GetBytes($InputString))
        # Get-FileHash can hash a stream, not just a file; emit one result per piped string
        Get-FileHash -InputStream $Stream -Algorithm $Algorithm
        $Stream.Dispose()
    }
}
```

The use would look something like this.

A SHA256 hash comes back from Get-FileHash as a 64-character hexadecimal string, every time, and for practical purposes it’s unique to its input. Awesome, I’d solved it. But I only needed 32 bytes, so I decided to just use every other character for a total of 32.

Pretty soon I had a function worked up to convert a supplied password (passphrase) in to a unique AES Key. But then that word started nagging me again. Entropy. Had I really created a function that would generate a key as unique as a randomly generated one? I decided that I should modify Matt Graeber’s work from the Powershell Magazine article and measure the entropy of randomly generated 32 byte arrays, and then my password derived byte arrays.

Again, the Powershell work I wrote to test this is gone and I don’t really feel like recreating it, but the gist is: I would use my Natural Language Password script to generate 1000 unique passphrases, and I would generate 1000 unique randomly generated 32-byte keys. I would then compare the entropy of each method’s 1000 iterations and average the results. A random key was generating an average entropy calculation of 4.88. My function was somewhere around 3.42. I realized that hash strings only contain hexadecimal characters (0-9 and A-F) and therefore couldn’t produce every possible byte value. I tinkered with multiplication, Get-Random seeding, other hash algorithms, and a couple of other things, but the highest I got my entropy number to was something like 3.62. I wasn’t happy. I searched the internet for something like “AES Key password based” and one of the results was “PBKDF2”, or “Password-Based Key Derivation Function 2.”
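The original test script is gone, but the measurement itself is easy to sketch. The function below is a stand-in, not Matt Graeber's code: Get-ByteEntropy (a hypothetical name) computes the Shannon entropy of a byte array in bits per byte from how often each value occurs. With only 32 bytes per sample the ceiling is log2(32) = 5 bits, reached only when all 32 values differ, which is why even random keys average a little under 5, and why bytes drawn from just 16 hexadecimal characters can never exceed log2(16) = 4.

```powershell
# Hypothetical helper: Shannon entropy (bits per byte) of a byte array
function Get-ByteEntropy {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [byte[]]$Bytes
    )
    # Count how many times each byte value appears
    $Counts = @{}
    foreach ($Byte in $Bytes) {
        $Counts[$Byte] = 1 + [int]$Counts[$Byte]
    }
    # H = -sum(p * log2(p)) over the observed byte values
    $Entropy = 0.0
    foreach ($Count in $Counts.Values) {
        $P = $Count / $Bytes.Length
        $Entropy -= $P * [Math]::Log($P, 2)
    }
    $Entropy
}

# Compare a random 32-byte key with the padded "TopSecret" key
$RandomKey = [byte[]]::new(32)
[System.Security.Cryptography.RandomNumberGenerator]::Create().GetBytes($RandomKey)
$PaddedKey = [System.Text.Encoding]::UTF8.GetBytes('TopSecret'.PadRight(32, 'K'))
Get-ByteEntropy -Bytes $RandomKey   # usually a little under 5
Get-ByteEntropy -Bytes $PaddedKey   # about 1.69
```

Averaging this over many samples per method is the comparison described above.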

There I go again, not seeing the forest for the trees. Of course something like PBKDF2 exists; how else would we get unique keys for password managers, VPN connections, etc.? A little bit of searching later I had found a .NET class for leveraging PBKDF2, and when I tested it for entropy I was getting 4.88, just like the randomly generated keys.

PBKDF2 in Powershell

Focusing on the idea of an end user providing a master password, and turning that in to a unique 32 byte key, I set to work on a function for accomplishing this. Here’s the final product so we can go through it.

```powershell
Function ConvertTo-AESKey {
    [cmdletbinding()]
    Param (
        [Parameter(Mandatory = $true, Position = 0)]
        [System.Security.SecureString]$SecureStringInput,
        [Parameter(Mandatory = $false)]
        [String]$Salt = '|½ÁôøwÚ♀å>~I©k◄=ýñíî',
        [Parameter(Mandatory = $false)]
        [Int32]$Iterations = 1000,
        [Parameter(Mandatory = $false)]
        [Switch]$ByteArray
    )
    Begin {
        # Create a byte array from Salt string
        $SaltBytes = ConvertTo-Bytes -InputString $Salt -Encoding UTF8
    }
    Process {
        # Temporarily plaintext our SecureString password input. There's really no way around this.
        $Password = ConvertFrom-SecureStringToPlainText $SecureStringInput
        # Create our PBKDF2 object and instantiate it with the necessary values
        $PBKDF2 = New-Object Security.Cryptography.Rfc2898DeriveBytes -ArgumentList @($Password, $SaltBytes, $Iterations, 'SHA256')
        # Generate our AES Key
        $Key = $PBKDF2.GetBytes(32)
        # If the ByteArray switch is provided, return a plaintext byte array, otherwise turn our AES key in to a SecureString object
        If ($ByteArray) {
            $KeyOutput = $Key
        }
        Else {
            # Convert the key bytes to a unicode string -> SecureString
            $KeyBytesUnicode = ConvertFrom-Bytes -InputBytes $Key -Encoding Unicode
            $KeyAsSecureString = ConvertTo-SecureString -String $keyBytesUnicode -AsPlainText -Force
            $KeyOutput = $KeyAsSecureString
        }
    }
    End {
        return $KeyOutput
    }
}
```

With this function, if you provide the same password, you’ll get the same very unique, seemingly random, 32 byte key out of it. It can be reproduced on any computer with this same function.

The information for the .NET “Rfc2898DeriveBytes” class wasn’t hard to find, and all of the other articles surrounding PBKDF2 seemed to make sense. You need to provide a clear text password, a salt (in byte form), the number of iterations, and the hashing algorithm. For iterations, 1000 is the minimum RFC 2898 recommended back in 2000; current guidance such as OWASP’s calls for far more (600,000 for PBKDF2-HMAC-SHA256). You can see in the above code I provided the salt statically. A salt isn’t meant to be secret; its job is to defeat precomputed (rainbow table) attacks, so exposing it is generally considered acceptable. The trade-off of a fixed salt is that every key this function derives shares it. There are a few helper functions called out in here that I wrote along with this:

- ConvertTo-Bytes
- ConvertFrom-Bytes
- ConvertFrom-SecureStringToPlainText

These just make it easier to read what’s happening but they’re all essentially using some .NET class in the background.

For security’s sake, unless the -ByteArray switch is used, the derived key is then converted to a SecureString object using the standard DPAPI encryption and returned.

As an example, if I were to feed the password “password” into the above function, the resulting byte values would be:

```
58,17,32,1,253,255,156,186,174,118,20,201,237,59,75,81,38,137,247,12,31,34,162,127,17,116,183,247,85,27,246,10
```

I now had a function that would deal with collecting the master password from the user, a function for converting that into a unique AES key using PBKDF2, and some helper functions. Now it’s time to encrypt some stuff.
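Stripped of the module's helpers and SecureString handling, the core derivation looks roughly like this. It's a simplified sketch, not ConvertTo-AESKey itself: New-Pbkdf2Key is a hypothetical name, it takes a plain string for brevity, and the Rfc2898DeriveBytes constructor that accepts a HashAlgorithmName needs .NET Framework 4.7.2 or later (or PowerShell 7). The 600,000-iteration default follows OWASP's current suggestion for PBKDF2-HMAC-SHA256; pass -Iterations 1000 to mirror the count used above.

```powershell
# Hypothetical helper: derive a repeatable key from a password with PBKDF2-HMAC-SHA256
function New-Pbkdf2Key {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [string]$Password,
        # Same password + salt + iterations always yields the same key
        [Parameter(Mandatory = $true)]
        [byte[]]$Salt,
        [int]$Iterations = 600000,
        # 32 bytes = 256-bit AES key
        [int]$KeyLength = 32
    )
    $Kdf = [System.Security.Cryptography.Rfc2898DeriveBytes]::new(
        $Password, $Salt, $Iterations,
        [System.Security.Cryptography.HashAlgorithmName]::SHA256)
    try {
        $Key = $Kdf.GetBytes($KeyLength)
    }
    finally {
        $Kdf.Dispose()
    }
    # The leading comma returns the byte[] as one object instead of unrolling it
    return , $Key
}

$Salt = [System.Text.Encoding]::UTF8.GetBytes('example-salt')
$KeyA = New-Pbkdf2Key -Password 'password' -Salt $Salt -Iterations 1000
$KeyB = New-Pbkdf2Key -Password 'password' -Salt $Salt -Iterations 1000
# Same inputs, same key
[Convert]::ToBase64String($KeyA) -eq [Convert]::ToBase64String($KeyB)
```

Raising the iteration count makes every guess in a brute-force attack proportionally more expensive, while a legitimate user only pays that cost once per derivation.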

Encrypting strings

I’ve covered how to protect string data with SecureString objects and AES encryption, and that’s exactly how I started this. I’d generate a unique key using my ConvertTo-AESKey function and then I would convert the supplied unprotected text to a SecureString object, convert it from a SecureString object with my AES Key and then output the resulting cipher text.

I did notice a bit of a pattern, though, when looking at some example text. Consider the following code, and assume the $Key variable has a key in it already:

```powershell
$Words = @("test","test2","stuff","things")
Function Protect {
    Param ( $InputObject )
    $SecureString = ConvertTo-SecureString $InputObject -AsPlainText -Force
    $CipherText = ConvertFrom-SecureString $SecureString -Key $Key
    $CipherText
}
$Words | %{ Protect $_ }
```

The output text looks like this:

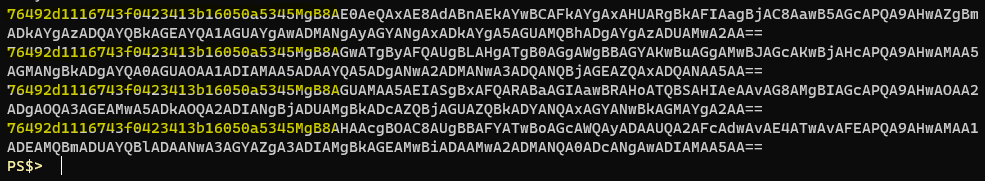

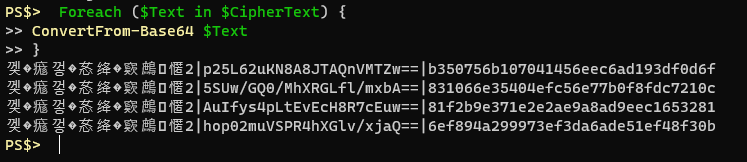

I’ve highlighted some repeating text observed at the beginning of each example. I tried to find more information about how exactly ConvertFrom-SecureString operates with regards to AES encryption but I couldn’t find much out there. The output text is all base64 encoded, and decoding it offers only a little extra info.

Loading the cipher text into an array called $CipherText and running a foreach loop over it with a quick and dirty ConvertFrom-Base64 function, you can see there’s a bit of a pattern: some bytes of indiscernible value, a pipe, another Base64 string, a pipe, and likely our encrypted text. No matter what I encrypt, the first string of bytes seems to be the same. The Base64 string in the middle changes every time, even if you’re encrypting the same plain text with the same AES key. I’m thinking this is the initialization vector that ConvertFrom-SecureString uses with each iteration. Then the last string after the pipe must be our encrypted data.
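That quick and dirty decoding is easy to reproduce. The sketch below assumes the layout visible when you decode the sample cipher text shown earlier in this post: a 32-character hex marker (76492d1116743f0423413b16050a5345), followed by Base64 of UTF-16LE text shaped like `2|IV|ciphertext`, where the IV is Base64 and the ciphertext is hex. That layout is inferred from the output, not from documentation, and Split-SecureStringCipherText is a hypothetical name.

```powershell
# Hypothetical helper: pull apart the output of ConvertFrom-SecureString -Key
function Split-SecureStringCipherText {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        [string]$CipherText
    )
    process {
        # The first 32 characters are the constant hex marker
        $Header = $CipherText.Substring(0, 32)
        # The remainder is Base64-encoded UTF-16LE ("Unicode") text
        $Payload = [System.Text.Encoding]::Unicode.GetString(
            [System.Convert]::FromBase64String($CipherText.Substring(32)))
        # Assumed shape: <version>|<Base64 IV>|<hex ciphertext>
        $Parts = $Payload.Split('|')
        [PSCustomObject]@{
            Header       = $Header
            Version      = $Parts[0]
            IV           = [System.Convert]::FromBase64String($Parts[1])
            EncryptedHex = $Parts[2]
        }
    }
}

$Key = [byte[]](1..32)
$Secure = ConvertTo-SecureString 'test' -AsPlainText -Force
ConvertFrom-SecureString $Secure -Key $Key | Split-SecureStringCipherText
```

Running it twice on the same input should show an identical Header and Version but a different IV each time, which fits the initialization-vector theory.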

In my searches though for how to properly leverage AES encryption on strings in Powershell I ran across this blog by Richard Ulfvin. He did a really nice job of going through how to use the .NET classes to protect strings with AES encryption.

I quickly refactored my current method to use what he shared and found that the returned cipher text is exactly what I dictated be output: the first 16 bytes were the initialization vector, followed by my encrypted data.

Putting together Richard’s method and adding a small function to handle the invocation of the .NET AES Crypto provider I ended up with this.

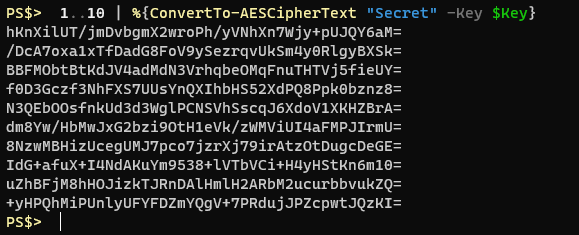

```powershell
Function ConvertTo-AESCipherText {
    <#
    .Synopsis
    Convert input string to AES encrypted cipher text
    #>
    [cmdletbinding()]
    param(
        [Parameter(ValueFromPipeline = $true,Position = 0,Mandatory = $true)]
        [string]$InputString,
        [Parameter(Position = 1,Mandatory = $true)]
        [Byte[]]$Key
    )
    Process {
        # Fresh random 16-byte IV for every string
        $InitializationVector = [System.Byte[]]::new(16)
        Get-RandomBytes -Bytes $InitializationVector
        $AESCipher = Initialize-AESCipher -Key $Key
        $AESCipher.IV = $InitializationVector
        $ClearTextBytes = ConvertTo-Bytes -InputString $InputString -Encoding UTF8
        $Encryptor = $AESCipher.CreateEncryptor()
        $EncryptedBytes = $Encryptor.TransformFinalBlock($ClearTextBytes, 0, $ClearTextBytes.Length)
        # Prepend the IV so decryption can recover it
        [byte[]]$FullData = $AESCipher.IV + $EncryptedBytes
        $Encryptor.Dispose()
        $AESCipher.Dispose()
        # Emit one result per input so piped strings aren't reduced to the last one
        [System.Convert]::ToBase64String($FullData)
    }
}
```

Example output from that function would look like this.

Note that encrypting the exact same string, with the same key, 10 times produces completely different cipher text. This is what true encryption should look like. Notice it’s also a bit more succinct than the ConvertFrom-SecureString method since it doesn’t have that mystery text at the beginning.
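Because Get-RandomBytes, Initialize-AESCipher and ConvertTo-Bytes are module helpers not shown here, the following is a self-contained sketch of the same IV-plus-ciphertext layout, along with the matching decryption step. The names Protect-AesString and Unprotect-AesString are hypothetical, the sketch relies on the defaults of `[System.Security.Cryptography.Aes]::Create()` (CBC mode with PKCS7 padding), and AES-CBC on its own doesn't detect tampering; adding an HMAC or using an authenticated mode would be the next step for anything serious.

```powershell
# Hypothetical helper: AES-encrypt a string and return Base64(IV + ciphertext)
function Protect-AesString {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [string]$PlainText,
        # 32 bytes selects AES-256
        [Parameter(Mandatory = $true)]
        [byte[]]$Key
    )
    $Aes = [System.Security.Cryptography.Aes]::Create()
    try {
        $Aes.Key = $Key
        # New random 16-byte IV on every call, so identical inputs encrypt differently
        $Aes.GenerateIV()
        $ClearBytes = [System.Text.Encoding]::UTF8.GetBytes($PlainText)
        $Encryptor = $Aes.CreateEncryptor()
        $CipherBytes = $Encryptor.TransformFinalBlock($ClearBytes, 0, $ClearBytes.Length)
        $Encryptor.Dispose()
        # Store the IV in front of the ciphertext so decryption can recover it
        [System.Convert]::ToBase64String([byte[]]($Aes.IV + $CipherBytes))
    }
    finally {
        $Aes.Dispose()
    }
}

# Hypothetical counterpart: split off the IV and decrypt the rest
function Unprotect-AesString {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [string]$CipherText,
        [Parameter(Mandatory = $true)]
        [byte[]]$Key
    )
    $AllBytes = [System.Convert]::FromBase64String($CipherText)
    $Aes = [System.Security.Cryptography.Aes]::Create()
    try {
        $Aes.Key = $Key
        # First 16 bytes are the IV
        $Aes.IV = [byte[]]$AllBytes[0..15]
        $Decryptor = $Aes.CreateDecryptor()
        # Everything after the IV is ciphertext
        $ClearBytes = $Decryptor.TransformFinalBlock($AllBytes, 16, $AllBytes.Length - 16)
        $Decryptor.Dispose()
        [System.Text.Encoding]::UTF8.GetString($ClearBytes)
    }
    finally {
        $Aes.Dispose()
    }
}

$Key = [byte[]]::new(32)
[System.Security.Cryptography.RandomNumberGenerator]::Create().GetBytes($Key)
$First = Protect-AesString -PlainText 'test' -Key $Key
$Second = Protect-AesString -PlainText 'test' -Key $Key
$First -ne $Second                                  # True: the random IV changes every result
Unprotect-AesString -CipherText $First -Key $Key    # returns 'test'
```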

A Module takes shape

At this point I knew I wanted to be able to collect a master password from the user, derive a unique key from that, store it somewhere within the session for recurring use, and then encrypt and decrypt strings with it. It might also be nice to be able to export the unique key to a file and similarly import it from a file. This would allow you to provide a super long, complex, hard to memorize password, and still save the resulting key somewhere. This would be very similar in function to just randomly generating an AES key and saving the key to a file somewhere.

It would be nice to be able to check if the master password has been set. Remove it if needed, and also set it to a desired master password. For public facing functions that sets me up with:

- Export-MasterPassword
- Import-MasterPassword
- Get-MasterPassword
- Set-MasterPassword
- Protect-String
- Remove-MasterPassword
- Unprotect-String

On the private side of things, however, there are quite a few more things at play. I’ll list them and then talk about a few of them:

- Clear-AESMPVariable
- ConvertFrom-AESCipherText
- ConvertFrom-Bytes
- ConvertFrom-SecureStringToPlainText
- ConvertTo-AESCipherText
- ConvertTo-AESKey
- ConvertTo-Bytes
- Get-AESMPVariable
- Get-DPAPIIdentity
- Get-RandomBytes
- Initialize-AESCipher
- New-CipherObject
- Set-AESMPVariable

Set, Get and Clear AESMPVariable are all about storing the key in a global session variable. I was picturing myself importing a CSV to a variable and then encrypting certain properties from that CSV before writing it back out to a CSV file. I wouldn’t want to have to supply the same master password every time I performed this operation. The only thing I could think of so far was a global scope session variable. You can manually protect the data by running the “Remove-MasterPassword” function which will clear out the variable. In the future I may find a way to add a time-based limit on it, but my efforts towards that so far have been failures.
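Here's a rough sketch of what storing the key in a global session variable can look like. Set-SessionKey, Get-SessionKey, Clear-SessionKey and the variable name ProtectStringsSessionKey are hypothetical stand-ins for the module's private functions, not their actual code.

```powershell
# Hypothetical stand-in for Set-AESMPVariable
function Set-SessionKey {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [System.Security.SecureString]$Key
    )
    # Global scope so the key survives between function calls for the rest of the session
    Set-Variable -Name 'ProtectStringsSessionKey' -Value $Key -Scope Global
}

# Hypothetical stand-in for Get-AESMPVariable
function Get-SessionKey {
    # Returns nothing (rather than an error) when no key has been set yet
    Get-Variable -Name 'ProtectStringsSessionKey' -Scope Global -ValueOnly -ErrorAction SilentlyContinue
}

# Hypothetical stand-in for Clear-AESMPVariable
function Clear-SessionKey {
    # Remove the variable entirely so the key no longer lingers in the session
    Remove-Variable -Name 'ProtectStringsSessionKey' -Scope Global -ErrorAction SilentlyContinue
}
```

Keeping the value as a SecureString means it's at least not sitting in the session as plain text between uses.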

ConvertFrom-SecureStringToPlainText, while horribly named, is straightforward: it returns the plaintext from a SecureString object by decrypting its DPAPI protection.
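For comparison, here is one common way to get the plaintext back out of a SecureString that works in both Windows PowerShell 5.1 and PowerShell 7. This isn’t necessarily how the module does it, and the function name is hypothetical.

```powershell
# Hypothetical stand-in for ConvertFrom-SecureStringToPlainText.
function ConvertFrom-SecureStringSketch {
    param([Parameter(Mandatory)][System.Security.SecureString]$SecureString)
    # NetworkCredential's .Password property returns the SecureString's contents as plain text
    [System.Net.NetworkCredential]::new('', $SecureString).Password
}

# PowerShell 7+ also has this built in:
# ConvertFrom-SecureString -SecureString $secure -AsPlainText
```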

A lot of the other functions are just pretty wrappers on a terse call to a .NET class. ConvertTo and ConvertFrom AESCipherText are all about performing that encryption and decryption operation described above using the supplied key from the master password.
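To illustrate what a “pretty wrapper” means here, this is a sketch of what Get-RandomBytes, ConvertTo-Bytes and ConvertFrom-Bytes might look like. The bodies are assumptions (UTF-8 encoding, a cryptographic random number generator), not the module’s code, and the names carry a `Sketch` suffix to make that clear.

```powershell
# Hypothetical wrappers around one-line .NET calls.
function Get-RandomBytesSketch {
    param([Parameter(Mandatory)][int]$Count)
    $bytes = New-Object byte[] $Count
    $rng = [System.Security.Cryptography.RandomNumberGenerator]::Create()
    try { $rng.GetBytes($bytes) }             # fill the buffer with cryptographically random bytes
    finally { $rng.Dispose() }
    ,$bytes                                   # comma keeps the array from being unrolled
}

function ConvertTo-BytesSketch {
    param([Parameter(Mandatory)][string]$String)
    ,([System.Text.Encoding]::UTF8.GetBytes($String))
}

function ConvertFrom-BytesSketch {
    param([Parameter(Mandatory)][byte[]]$Bytes)
    [System.Text.Encoding]::UTF8.GetString($Bytes)
}
```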

Shortly after getting most of my functions working, I had the thought that maybe, just maybe, I (or someone else) might want to use DPAPI encryption rather than AES. I decided to return protected strings as an object with two properties: the encryption type and the cipher text. The encryption type would be either DPAPI or AES, and the cipher text property would hold the encrypted text. Time for a class definition.

```powershell
Class CipherObject {
    [String] $Encryption
    [String] $CipherText
    hidden [String] $DPAPIIdentity

    CipherObject ([String]$Encryption, [String]$CipherText) {
        $this.Encryption = $Encryption
        $this.CipherText = $CipherText
        $this.DPAPIIdentity = $null
    }
}
```

This leads me into talking about the Get-DPAPIIdentity function. Since DPAPI’s key is based on the user and the system, I thought it might be handy to add that information to the output. That way, if you attempt to decrypt DPAPI-protected strings on another system, or as a different user, the error message can say who originally protected it.
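A minimal sketch of how a recorded identity could drive that friendlier error. The `COMPUTER\user` format and both function names are assumptions; the module’s Get-DPAPIIdentity may record something different. Hidden class properties such as the CipherObject’s DPAPIIdentity are still readable by name, so a check like this works on them as well as on plain objects.

```powershell
# Hypothetical: describe the current user and machine, which is what DPAPI keys are tied to.
function Get-DPAPIIdentitySketch {
    '{0}\{1}' -f [Environment]::MachineName, [Environment]::UserName
}

# Hypothetical pre-check: warn before a DPAPI decrypt that is likely to fail.
function Test-DPAPIIdentitySketch {
    [CmdletBinding()]
    param([Parameter(Mandatory)]$InputObject)   # any object with a DPAPIIdentity property
    $current = Get-DPAPIIdentitySketch
    if ($InputObject.DPAPIIdentity -and $InputObject.DPAPIIdentity -ne $current) {
        Write-Warning ('Protected by {0}, but you are {1}; DPAPI decryption will likely fail.' -f $InputObject.DPAPIIdentity, $current)
        return $false
    }
    $true
}
```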

If you attempt to decrypt AES-protected text with the wrong master password, you’ll just get an error message stating that the key was incorrect, and it will wipe out the currently saved master password.

Demo

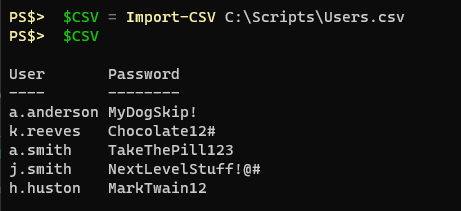

Let’s say we had a CSV file with some sensitive information in it, like usernames and passwords, and the CSV looked like this.

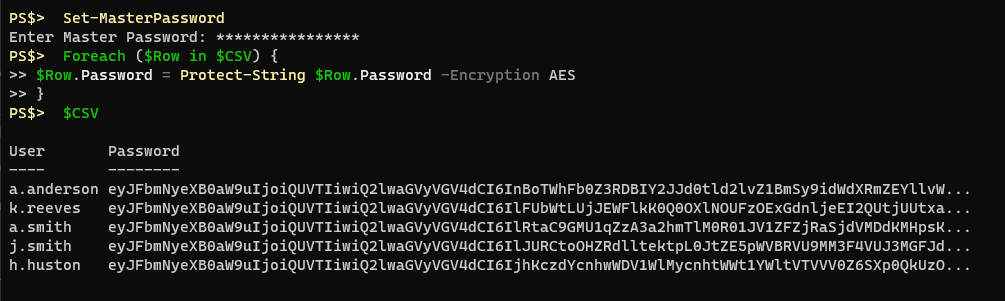

With the ProtectStrings module imported, I can set the master password I want to use for encryption/decryption, and then I can loop through this CSV and protect the sensitive information.

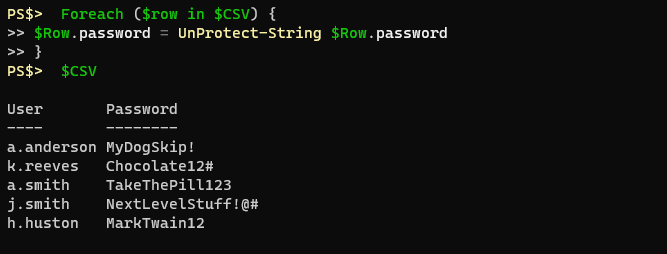

Then I can Export-CSV from that variable and safely write that information to disk. I can transport it to other computers, or other accounts, and when I reach my destination I can load the ProtectStrings module, set my master password, import the CSV, and loop through to decrypt the strings.
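The loop itself is simple. Here’s a generic sketch that applies any transform to chosen columns, so the same helper works for protecting and for unprotecting. `Update-CsvColumnSketch` is a hypothetical name; in practice the scriptblock would call the module’s Protect-String or Unprotect-String (check their parameters with Get-Help), while the test below uses a harmless ToUpper() as a stand-in.

```powershell
# Hypothetical helper: run a transform over selected columns of every CSV row.
function Update-CsvColumnSketch {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][object[]]$Rows,
        [Parameter(Mandatory)][string[]]$Column,
        [Parameter(Mandatory)][scriptblock]$Transform
    )
    foreach ($row in $Rows) {
        foreach ($name in $Column) {
            # Pass the current cell value to the transform and store the result
            $row.$name = & $Transform $row.$name
        }
        $row
    }
}
```

The row objects are updated in place, so the variable you imported into holds the transformed values afterward, ready to pipe to Export-Csv.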

Here’s an example using the DPAPI encryption, which is currently the default if you don’t specify.

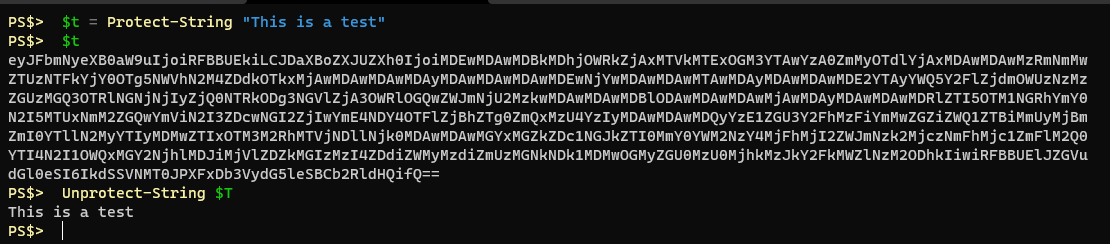

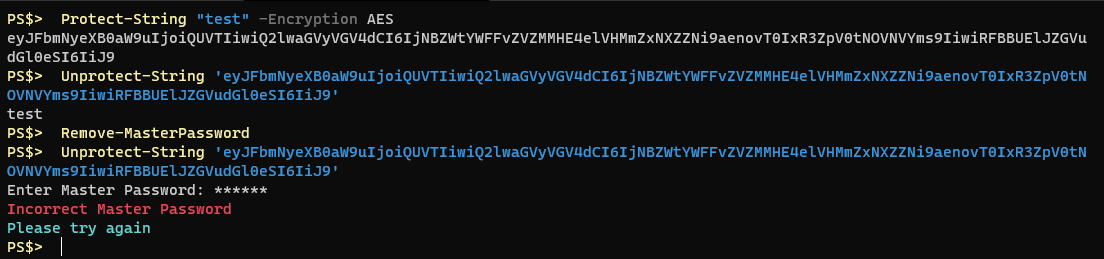

Here, I protect a string using AES encryption, then I clear the master password from the session and attempt to decrypt the same string again. I intentionally provide an incorrect master password, resulting in an error.

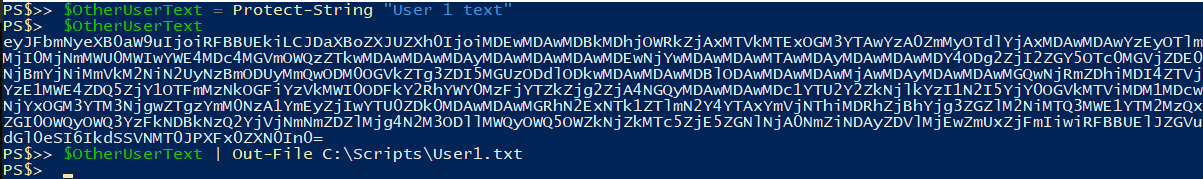

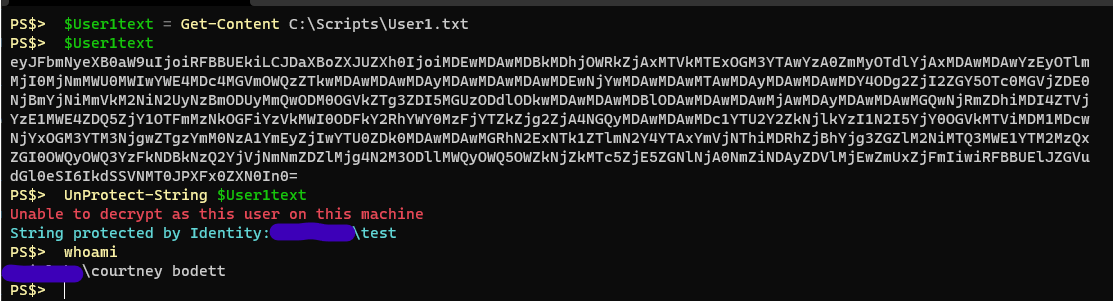

If I use a different user account (or a different PC) to protect a string with the default DPAPI encryption, save that output to a file, and transfer it to another user, you’ll see that decryption fails because I am not the same user.

Conclusions

Powershell is still fun and I still learn something new every day. Ultimately this module may not be very practical or have a lot of use, but it was a good exercise in writing functions and writing modules.

It’s not currently published anywhere as of writing this. I’m still using it internally and I have a specific project in mind where it could be helpful. This will help me iron out the kinks and eventually I’ll publish it to my repository on Github.

I’m not much of a cryptographer so if you see any glaring flaws please feel free to email me. Or if you have questions you may do the same.

Be good everyone, or be good at it.

-

Powershell all of the things. And more logging

“If all you have is a hammer, everything looks like a nail” - Abraham Maslow.

I use a variation of this quote a lot, and I typically use it in jest, but it’s also fairly true. I’m more than willing to admit when there is a better solution than trying to write a Powershell script. But I do love writing Powershell and often make the argument that since we predominantly use Windows, it makes sense to script things via Powershell. I recently had occasion to script something in Powershell to automate a task. While the purpose of the script was to simplify a routine operation, I took it as an opportunity to leverage my in-development logging module.

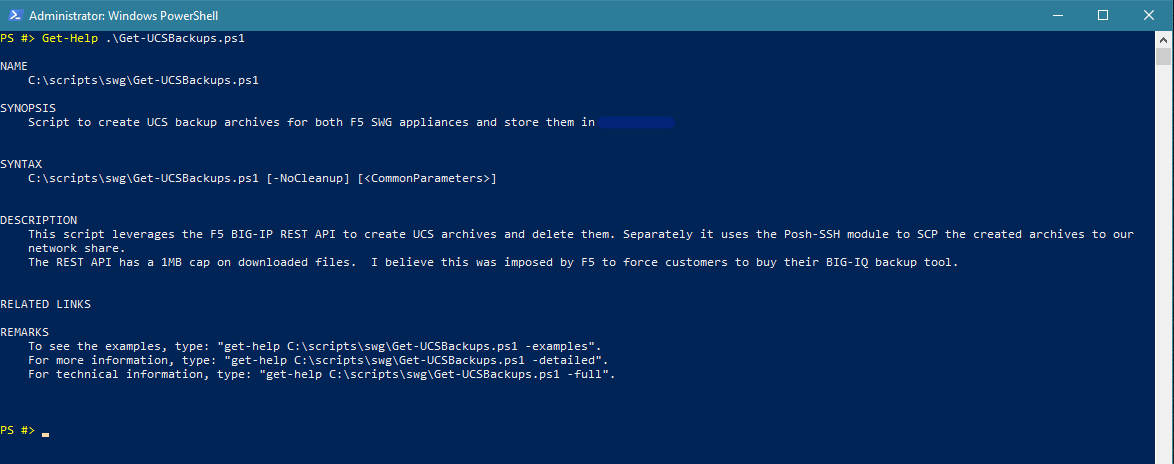

The Need

I recently learned that virtual F5 BIG-IPs should never be snapshotted via a hypervisor, as it can cause processes to stall out and high-availability clusters to fail over. Instead, F5 recommends that you create a configuration backup called a “UCS.” This is typically done with the web GUI, and the backup can then be downloaded from there and stored for safekeeping. Of course my first thought when learning this was “we can do that with Powershell.” I looked to see if F5’s BIG-IP had a REST API, and it did. Invoke-RestMethod to the rescue! Unfortunately, I would say that F5’s documentation about their API leaves a little bit to be desired, especially concerning their UCS backups. I couldn’t find any examples of people using Powershell to automate the creation and download of UCS backups.

That being said, getting connected to the F5 BIG-IP with Invoke-RestMethod wasn’t too bad, and you can authenticate with the built-in -Credential parameter and a PSCredential object, like this:

```powershell
$Uri = "https://$IP/mgmt/tm/sys/ucs"
$Headers = @{ "Content-Type" = "application/json" }
$UCSResponse = Invoke-RestMethod -Method GET -Headers $Headers -Uri $Uri -Credential $F5Creds
```

The request above will return some objects, stored in $UCSResponse, and among their properties you can get information about the current UCS archives on the appliance. I parse some of this information like this:

```powershell
$CurrentUCS = Foreach ($UCSFile in $UCSResponse.items.apirawvalues) {
    [PSCustomObject]@{
        Filename    = $UCSFile.filename.split('/')[-1]
        Version     = $UCSFile.version
        InstallDate = $UCSFile.install_date
        Size        = $UCSFile.file_size
        Encrypted   = $UCSFile.encrypted
    }
}
```

The operation for creating a new UCS archive looks like this:

```powershell
$Headers = @{ "Content-Type" = "application/json" }
$Body = @{
    name    = "$BackupName"
    command = "save"
}
$Response = Invoke-RestMethod -Method POST -Headers $Headers -Body ($Body | ConvertTo-Json -Compress) -Uri $Uri -Credential $F5Creds -ContentType 'application/json'
```

That’s really all there is to it. You hit the right URL, pass the API commands and options in the body of the request, and authenticate with a PSCredential object. However, there is no documentation for how to download the resulting UCS backup. They cover where it’s located on the machine, and there is another API call for downloading files from the BIG-IP; however, when I worked through that, I found that the downloaded file was only 1MB instead of 300+MB.

Digging around some more, I found another piece of F5 documentation stating that their API for file downloads is capped at 1MB. This feels like an intentional move on F5’s part to push customers toward buying their BIG-IQ backup appliance for managing these things. Some members on their forum pointed out that F5’s own Python SDK can handle downloading a UCS archive, and it’s ultimately using the same API, so off I went to GitHub to read some Python. Turns out they built a loop into their file download function that downloads the file in 0.5MB chunks while streaming it to a file. I also saw comments on GitHub that this is reportedly very slow.
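To put that chunking in perspective, here is a sketch of the range arithmetic only. It assumes 512 KB chunks for “0.5MB” and inclusive start/end byte offsets; it does not reproduce the SDK’s actual requests or headers, and the function name is hypothetical.

```powershell
# Hypothetical: split a file of $TotalBytes into inclusive byte ranges of at most $ChunkBytes.
function Get-ChunkRangeSketch {
    param(
        [Parameter(Mandatory)][long]$TotalBytes,
        [long]$ChunkBytes = 512KB
    )
    for ($start = 0L; $start -lt $TotalBytes; $start += $ChunkBytes) {
        # The last chunk may be shorter than $ChunkBytes
        $end = [Math]::Min($start + $ChunkBytes, $TotalBytes) - 1
        [PSCustomObject]@{ Start = $start; End = $end }
    }
}

# Usage: how many requests would a 300 MB archive take?
@(Get-ChunkRangeSketch -TotalBytes 300MB).Count
```

With 512 KB chunks, a 300 MB archive works out to 600 sequential requests, which may go some way toward explaining the reports of slowness.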

Put the hammer down

I was about two hours into writing my own version of this particular Python method in Powershell when I took a break and explained to a friend what I was doing. They looked at me as if I had told them I thought the CD-ROM tray was a cup holder. Once I looked up from what I was doing for a moment, I realized I shouldn’t try to work within F5’s constraints and should instead just Secure Copy (SCP) the file off the box. I’ve used the PoSH-SSH module before for SSH/SCP/SFTP functionality, and while I try to write scripts with little to no dependencies, this seemed like a worthwhile inclusion.

I put a “Sanity Checks” section near the beginning of my script and this is where I verify prerequisites. Checking for PoSH-SSH looks like this:

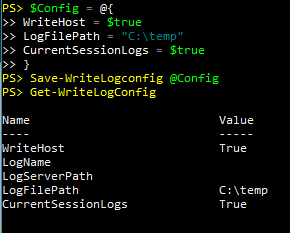

```powershell
if (-not (Test-Path "C:\Program Files\WindowsPowerShell\Modules\posh-ssh")) {
    Write-Host "Posh-SSH is needed to connect to SCP. Please install and try again" -ForegroundColor Red
    Exit
}
# import the Posh-SSH module for making an SCP connection
Import-Module -Name 'Posh-SSH' -Scope Local
```

Since this is a script and not a function, I feel comfortable using “Exit” to terminate script execution. Another thing I import is my in-development logging module. I’ve still been leveraging the module daily in conjunction with another module that’s really just a collection of daily-use functions. This script represented an opportunity to take advantage of good logging for auditing and troubleshooting purposes, since it could be run daily or weekly. I also wanted the script to log to a local file on my computer as well as a file on a network share, something I had envisioned from the outset of the WriteLog module.
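The Posh-SSH check above only looks in one install folder, so it would miss the module if it were installed for the current user only or for PowerShell 7, which use different folders. A hedged alternative is to ask PowerShell to search every path in `$env:PSModulePath`; the function name here is hypothetical.

```powershell
# Hypothetical helper: is a module installed anywhere on $env:PSModulePath?
function Test-ModuleAvailableSketch {
    param([Parameter(Mandatory)][string]$Name)
    [bool](Get-Module -ListAvailable -Name $Name)
}

# Usage in a "Sanity Checks" section (commented out so the sketch runs anywhere):
# if (-not (Test-ModuleAvailableSketch -Name 'Posh-SSH')) {
#     Write-Host 'Posh-SSH is needed for SCP. Please install it and try again.' -ForegroundColor Red
#     exit 1
# }
```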

I decided to try importing the module via a literal path:

```powershell
$PathToLogging = "C:\Scripts\Modules\WriteLog"
if (-not (Test-Path $PathToLogging)) {
    Write-Host "Logging Module not found at $PathToLogging" -ForegroundColor Red
    Write-Host "Please modify the PathToLogging variable and try again" -ForegroundColor Yellow
    Exit
}
Else {
    Import-Module -Name $PathToLogging
}
```

For brevity I’ve shown the variable and its use in one snippet. With the logging available, I can start using module functions to record and display output.

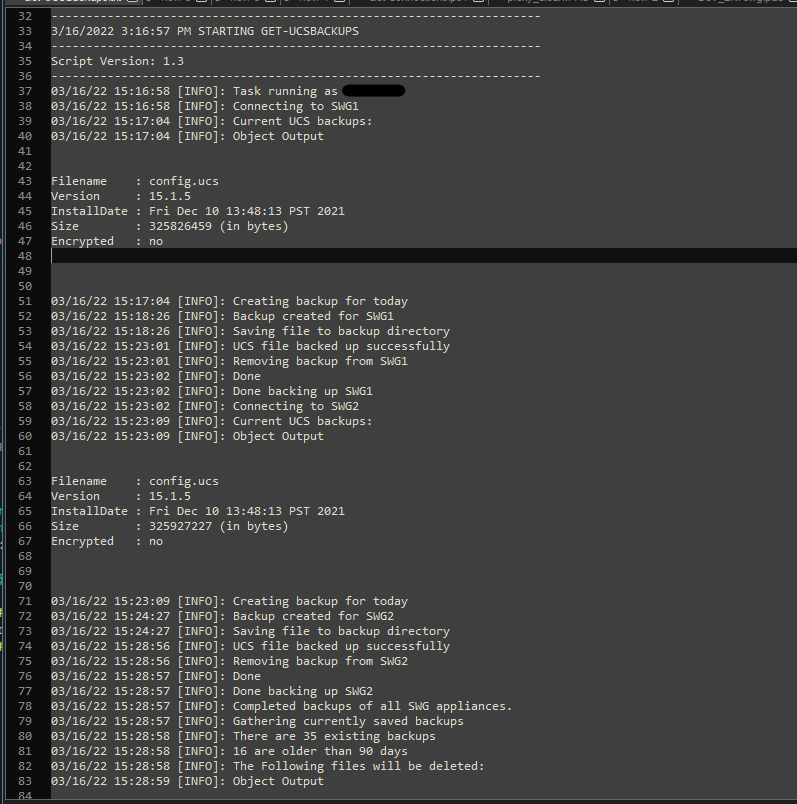

```powershell
Set-WriteLogConfig -LogFilePath $LogDir -LogServerPath $NetworkLogPath -WriteHost
Start-Log -ScriptVersion "1.3"
Write-LogEntry "Task running as $env:username"
```

This starts off by establishing a set of variables related to logging activities: the local file path, the network file path, whether or not to display the information to the host, whether to log to a global variable, and the log name. Some of this information is determined automatically and, as you can see, some is provided with parameters. Then I can start the log with “Start-Log”, which just puts a header of sorts in the log file and, in this case, includes the script version. That way, if I’m looking back through the logs, see different behavior, and notice that it came from version “1.2” instead, that may help me correlate.

For the rest of the script I’ll use “Write-LogEntry” and “Write-LogObject” to log information as well as display it to the host. What the console sees is exactly what gets logged to file.

Add-Content vs Out-File

There are some pretty good pages out there that cover the difference between the Add-Content and Out-File cmdlets. I honestly can’t remember my decision process early on. I was originally using Out-File in all my logging functions until I ran Get-Help on Add-Content and saw that its -Path parameter accepts an array of paths. I thought this would be really handy for dynamically providing the destination for logging: it could be a single local file, or as many local and network files as you wanted to put in the array. The actual code in Write-LogEntry for writing data to a file would then only have to be one line, and you could manage the destination as a variable. You can see how this originally turned out in my previous post, where I outlined how most of this functioned.
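That original one-liner idea looks something like this. The two temp-file paths are placeholders (the second stands in for a file on a network share), and the line format mirrors the Write-LogEntry format.

```powershell
# Hypothetical destinations: a local log and a stand-in for a network share path
$tmp = [System.IO.Path]::GetTempPath()
$Destination = @(
    (Join-Path $tmp 'sketch-local.log'),
    (Join-Path $tmp 'sketch-share.log')
)
Remove-Item -Path $Destination -ErrorAction SilentlyContinue   # start clean for the demo

$line = '{0} {1}: {2}' -f (Get-Date -Format 's'), 'INFO', 'Task started'
# -Path accepts a string array, so one call appends the line to every destination
Add-Content -Path $Destination -Value $line -Encoding UTF8
```

This is fine for purely local files; adding a destination on a network share is where the file-lock errors came in.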

I’ve been using it with Add-Content for months now without issue, but I’ve only been logging to a local file. For this backup script I wanted to log locally and to a network file. I immediately saw red text upon testing complaining that a “stream was unreadable” or something like that. File locks appeared to be the issue and all of my Google-fu was telling me that Out-File had better file lock handling. A quick refactor of my Write-Log functions and my errors were gone. Instead of a one-liner I came up with this instead for Write-LogEntry:

```powershell
Foreach ($FileDestination in $Destination) {
    Out-File -FilePath $FileDestination -Encoding 'UTF8' -Append -NoClobber -InputObject ('{0} {1}: {2}' -f $OutputObject.Timestamp, $OutputObject.Severity, $OutputObject.LogObject) -ErrorAction Stop
}
```

The end result is the same on the console and in the text file, and, at least so far, it doesn’t seem to be much slower. This was a good use case for testing my logging module. There were a couple of other little tweaks too, but we don’t need to go into them in this post.

Execute

Now that logging had been sorted out and all the other functional pieces were in place, we could execute the script. A quick Get-Help shows that there is only one parameter, and it’s an override to skip removing older backups.

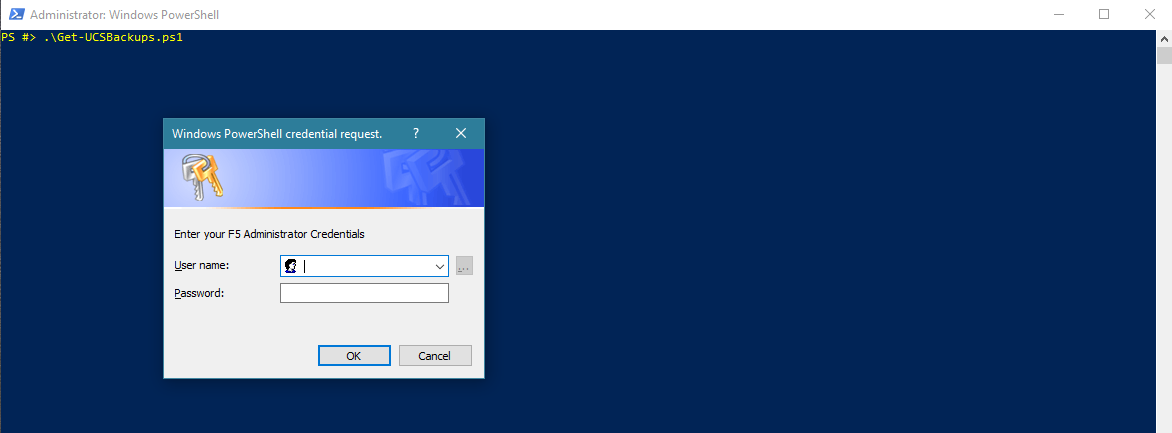

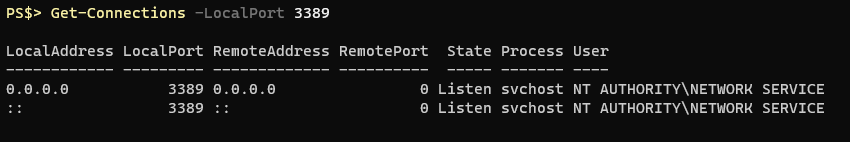

The first thing that happens after executing the script is a request for credentials. This is for authenticating against the F5 BIG-IP, for both the web API and SCP.

If I wanted to run this script as a scheduled task I’d need to secure those F5 credentials and make them available to the account executing the scheduled task.
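One common way to do that is Export-Clixml. On Windows, the credential’s SecureString password is protected with DPAPI, so the file can only be imported by the same user on the same computer, which means creating it while logged in as the scheduled task’s account. (On Linux and macOS, Export-Clixml does not encrypt credentials.) The path below is a placeholder, and a hard-coded test credential stands in for Get-Credential so the sketch runs unattended.

```powershell
# Placeholder path; in practice, somewhere only the task's account can read
$credPath = Join-Path ([System.IO.Path]::GetTempPath()) 'sketch-f5-cred.xml'

# One-time, interactive step, run as the account the scheduled task will use:
# Get-Credential | Export-Clixml -Path $credPath

# Non-interactive stand-in so this sketch runs end to end
$secure = ConvertTo-SecureString 'not-a-real-password' -AsPlainText -Force
[System.Management.Automation.PSCredential]::new('admin', $secure) | Export-Clixml -Path $credPath

# In the script, replace the credential prompt with:
$F5Creds = Import-Clixml -Path $credPath
```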

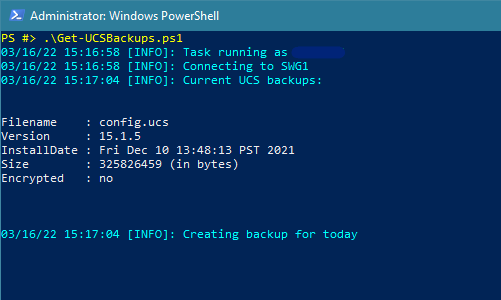

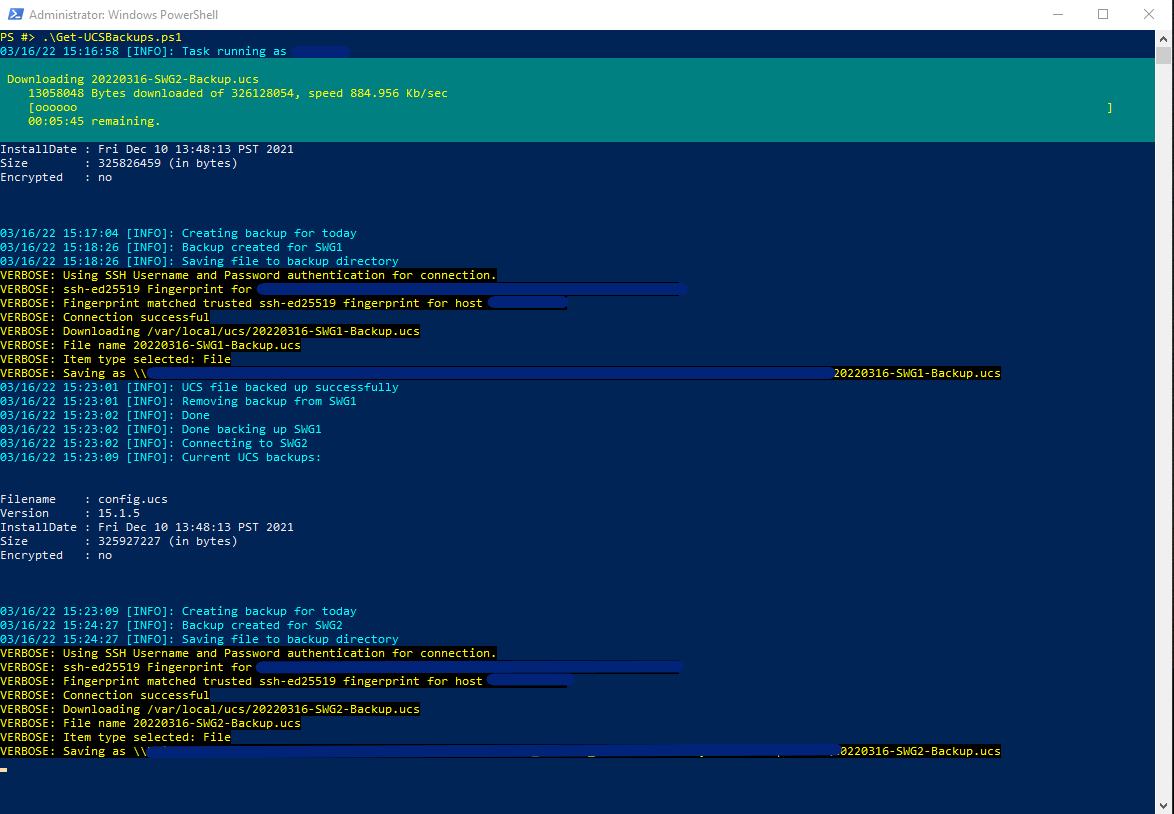

The script connects to the first F5 appliance we’re backing up and shows a list of the current UCS backup files present on the machine. Then it sends the API call to create a new UCS backup. This can take a moment or so:

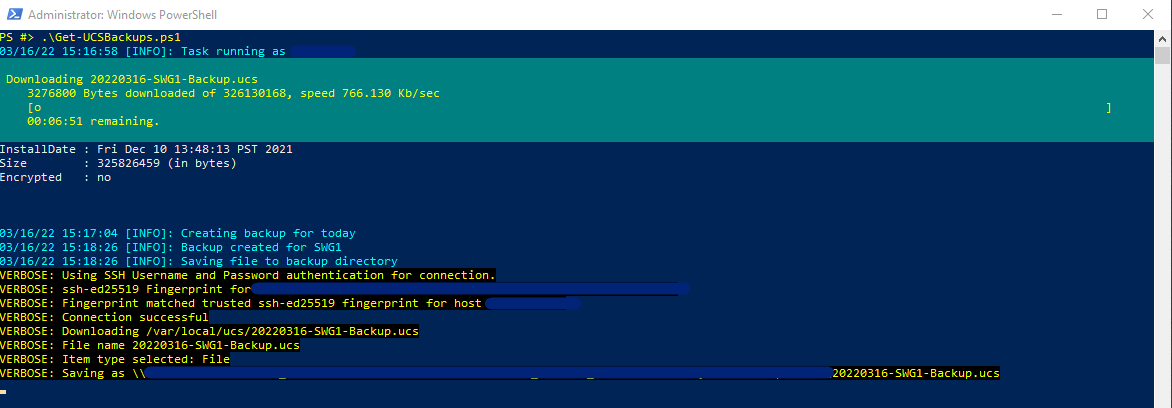

Once the backup is created the script moves on to using SCP to copy the file off the F5 appliance and to a network share. Thanks to the developers of the PoSH-SSH module there’s a nice progress bar while you wait for this to complete. I also called the cmdlet in the script with the -Verbose switch for extra information:

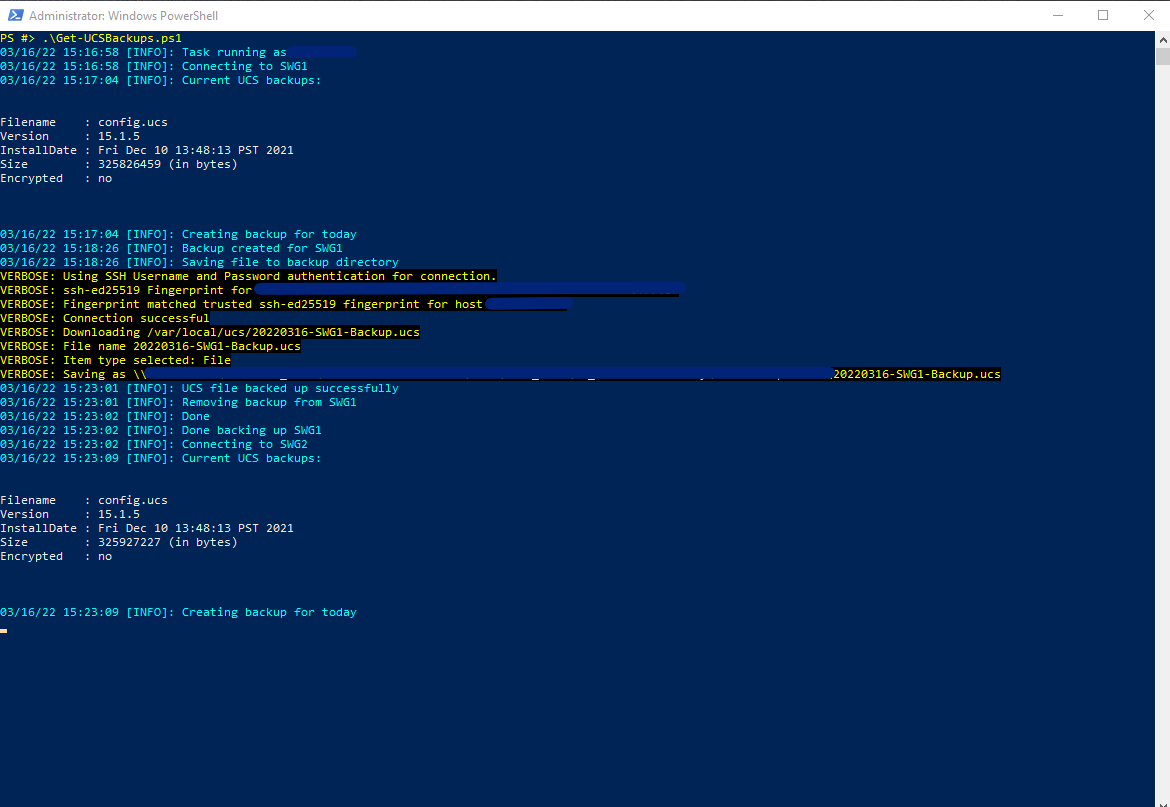

The script then loops through and does the same for the next appliance:

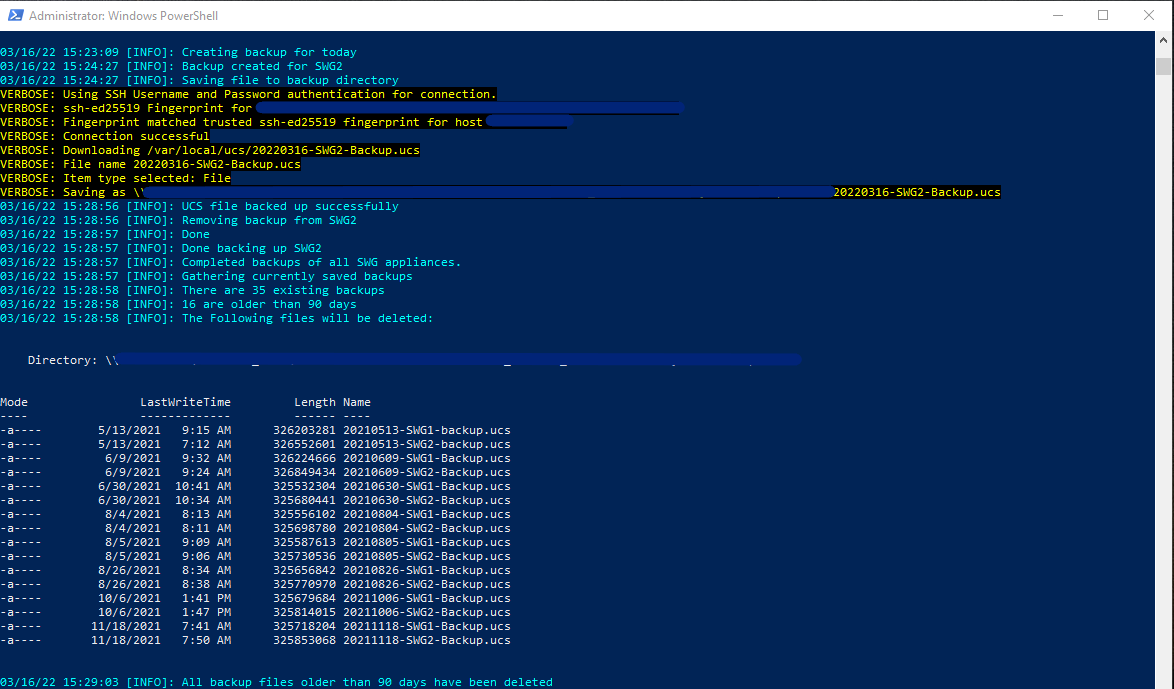

The tail end of the script deals with the backup destination directory. It gets all of the *.ucs files and selects anything that’s older than 90 days. It then shows you these files (thereby logging them as well) and then removes them.
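The retention step might be sketched like this. The function name and parameters are hypothetical; it returns the names of the files it removed so the caller can display and log them, for example by passing each one to Write-LogEntry.

```powershell
# Hypothetical retention step: delete *.ucs files older than $RetentionDays.
function Remove-OldBackupSketch {
    param(
        [Parameter(Mandatory)][string]$BackupDir,
        [int]$RetentionDays = 90
    )
    $cutoff = (Get-Date).AddDays(-$RetentionDays)
    $old = @(Get-ChildItem -Path $BackupDir -Filter '*.ucs' -File |
        Where-Object { $_.LastWriteTime -lt $cutoff })
    foreach ($file in $old) {
        Remove-Item -LiteralPath $file.FullName
        $file.Name   # report what was removed so the caller can show and log it
    }
}
```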

Here’s a snippet of one of the log files to show that it looks just like the console output:

Conclusion

The end script is about 200 lines for something that could probably be done in less than 20 (not including the logging module). However, it should be fairly robust, transferable to other teammates, and include really good logging so that I or others can audit the operation and troubleshoot any problems. Also, I learned why people recommend Out-File over Add-Content so often. Incidentally, Out-File also writes Powershell objects to a file the way they are seen on the console, whereas the *-Content cmdlets do not. So I’ve actually been using Out-File in my Write-LogObject function from the get-go to capture object output the same way it’s seen on the console. Maybe that should have been a clue.
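A quick way to see that difference is to write the same object with each cmdlet. The temp-file paths are placeholders.

```powershell
$obj = [PSCustomObject]@{ Name = 'bigip01'; Status = 'OK' }
$tmp = [System.IO.Path]::GetTempPath()
$outFilePath    = Join-Path $tmp 'sketch-outfile.txt'
$addContentPath = Join-Path $tmp 'sketch-addcontent.txt'
Remove-Item -Path $outFilePath, $addContentPath -ErrorAction SilentlyContinue

# Out-File sends the object through PowerShell's formatting system, as the console does
$obj | Out-File -FilePath $outFilePath
# Add-Content writes the object's string form instead: @{Name=bigip01; Status=OK}
Add-Content -Path $addContentPath -Value $obj

Get-Content -Path $outFilePath       # a small table with Name and Status columns
Get-Content -Path $addContentPath    # @{Name=bigip01; Status=OK}
```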

-

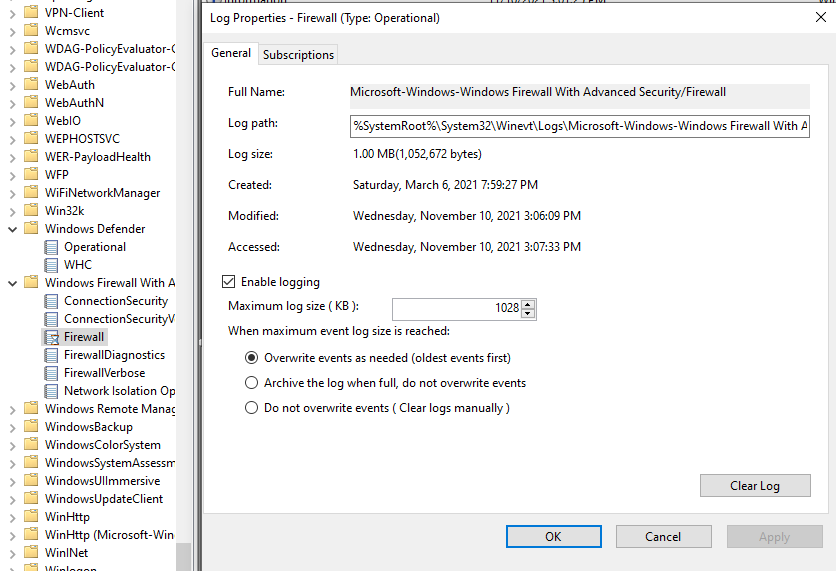

Get-WindowsFirewallBlocks

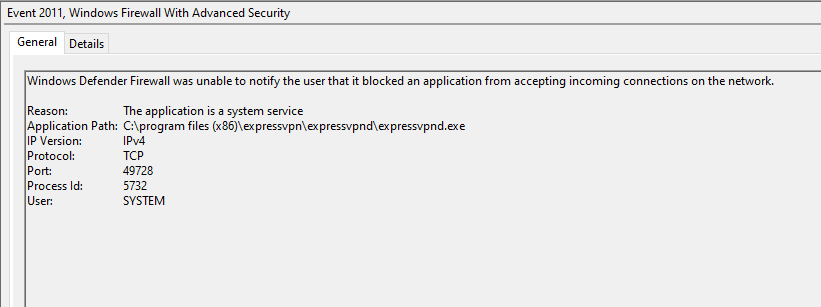

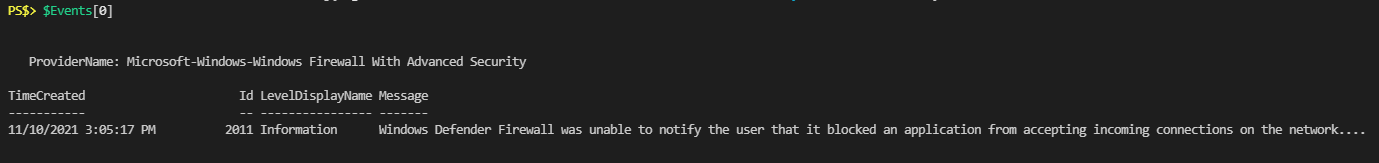

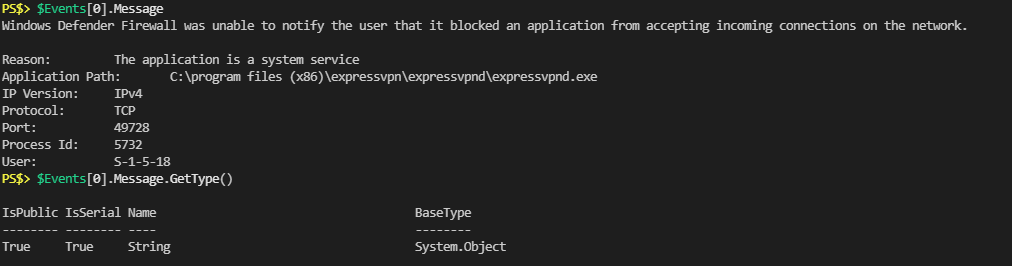

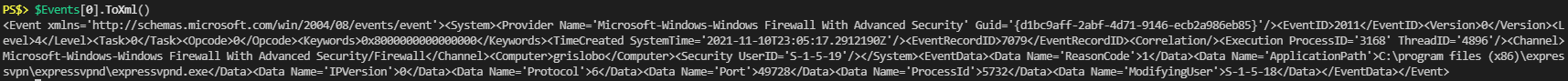

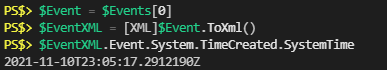

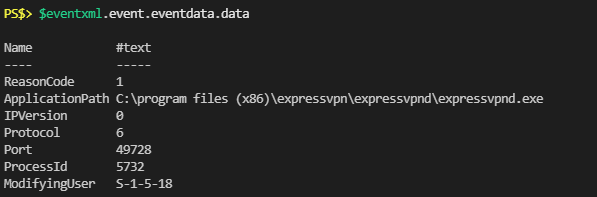

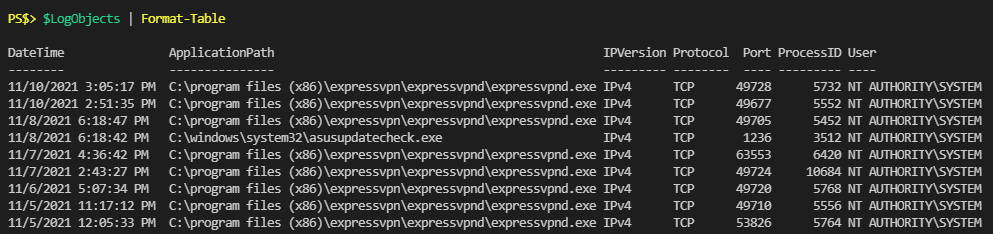

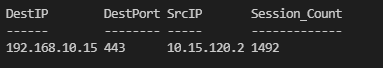

Introduction